Neural Network Approach

Introduction: The neural network approach is motivated by biological neural networks. Roughly speaking, a neural network is a set of connected input/output units, where each connection has a weight associated with it. Neural networks have several properties that make them popular for clustering. First, neural networks are inherently parallel and distributed processing architectures. Second, neural networks learn by adjusting their interconnection weights so as to best fit the data. This allows them to “normalize” or “prototype” the patterns and act as feature (or attribute) extractors for the various clusters. Third, neural networks process numerical vectors and require object patterns to be represented by quantitative features only. Many clustering tasks handle only numerical data or can transform their data into quantitative features if needed.

The neural network approach to clustering tends to represent each cluster as an exemplar. An exemplar acts as a “prototype” of the cluster and does not necessarily have to correspond to a particular data example or object. New objects can be distributed to the cluster whose exemplar is the most similar, based on some distance measure. The attributes of an object assigned to a cluster can be predicted from the attributes of the cluster’s exemplar.
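As a rough illustration of exemplar-based assignment, the sketch below places a new object in the cluster whose exemplar is nearest and then reads predicted attributes off that exemplar. The exemplar coordinates, the choice of Euclidean distance, and the function name `nearest_exemplar` are assumptions made for this example; the text only requires "some distance measure."

```python
import math

def nearest_exemplar(obj, exemplars):
    """Return the index of the exemplar closest to obj (Euclidean distance)."""
    return min(range(len(exemplars)), key=lambda i: math.dist(obj, exemplars[i]))

# Hypothetical exemplars in a 2-D feature space (illustrative values only).
# An exemplar need not coincide with any actual data object.
exemplars = [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)]

new_object = (4.2, 4.9)
cluster = nearest_exemplar(new_object, exemplars)

# The exemplar's attributes serve as the prediction for the assigned object.
predicted_attributes = exemplars[cluster]
print(cluster, predicted_attributes)
```

Any other distance or similarity measure could be substituted for `math.dist` without changing the structure of the assignment step.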

Self-organizing feature maps (SOMs) are one of the most popular neural network methods for cluster analysis. They are sometimes referred to as Kohonen self-organizing feature maps, after their creator, Teuvo Kohonen, or as topologically ordered maps. The goal of SOMs is to represent all points in a high-dimensional source space by points in a low-dimensional (usually 2-D or 3-D) target space, such that the distance and proximity relationships (hence the topology) are preserved as much as possible. The method is particularly useful when a nonlinear mapping is inherent in the problem itself.

SOMs can also be viewed as a constrained version of k-means clustering, in which the cluster centers tend to lie in a low-dimensional manifold in the feature or attribute space. With SOMs, clustering is performed by having several units competing for the current object. The unit whose weight vector is closest to the current object becomes the winning or active unit. So as to move even closer to the input object, the weights of the winning unit are adjusted, as well as those of its nearest neighbors. SOMs assume that there is some topology or ordering among the input objects and that the units will eventually take on this structure in space. The organization of units is said to form a feature map. SOMs are believed to resemble processing that can occur in the brain and are useful for visualizing high-dimensional data in 2-D or 3-D space.
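The competitive step described above can be sketched as follows. This is a minimal illustration, not a reference implementation: the rectangular grid, the Gaussian neighborhood function, the linearly decaying learning rate and radius, and all names (`best_matching_unit`, `som_step`, `train_som`) are assumptions chosen for the example.

```python
import math
import random

def best_matching_unit(weights, x):
    """Index of the unit whose weight vector is closest to x (the winner)."""
    return min(range(len(weights)), key=lambda i: math.dist(weights[i], x))

def som_step(weights, grid, x, lr, radius):
    """Move the winning unit and its grid neighbors toward x, in place."""
    win = best_matching_unit(weights, x)
    for i, w in enumerate(weights):
        grid_dist = math.dist(grid[i], grid[win])  # distance on the map, not in feature space
        if grid_dist <= radius:
            # Assumed Gaussian neighborhood: units nearer the winner move more.
            h = math.exp(-(grid_dist ** 2) / (2 * radius ** 2))
            weights[i] = [wj + lr * h * (xj - wj) for wj, xj in zip(w, x)]
    return win

def train_som(data, rows, cols, epochs=20, lr0=0.5, radius0=None, seed=0):
    """Train a rows x cols map; learning rate and radius shrink over time."""
    rng = random.Random(seed)
    dim = len(data[0])
    grid = [(r, c) for r in range(rows) for c in range(cols)]
    weights = [[rng.random() for _ in range(dim)] for _ in grid]
    radius0 = radius0 or max(rows, cols) / 2
    total, t = epochs * len(data), 0
    for _ in range(epochs):
        for x in rng.sample(data, len(data)):  # present objects in random order
            frac = 1 - t / total
            som_step(weights, grid, x, lr0 * frac, max(radius0 * frac, 0.5))
            t += 1
    return grid, weights

# Hypothetical 2-D data with two loose groups (illustrative values only).
data = [(0.1, 0.2), (0.15, 0.1), (0.9, 0.8), (0.85, 0.95)]
grid, weights = train_som(data, rows=2, cols=2)
for x in data:
    print(x, "->", grid[best_matching_unit(weights, x)])
```

Because neighbors on the grid are pulled along with the winner, units that are adjacent on the map tend to end up with similar weight vectors, which is what lets the map reflect the ordering of the input objects.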

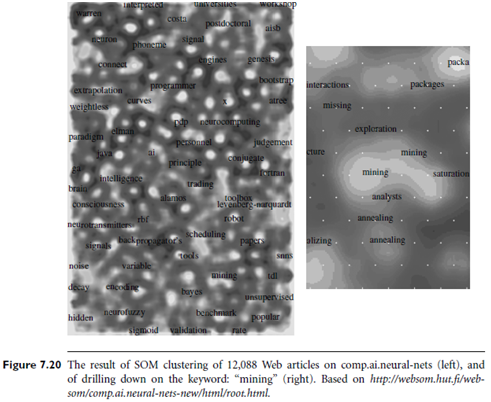

The SOM approach has been used successfully for Web document clustering. The left graph of Figure 7.20 shows the result of clustering 12,088 Web articles from the Usenet newsgroup comp.ai.neural-nets using the SOM approach, while the right graph of the figure shows the result of drilling down on the keyword “mining.”

The neural network approach to clustering has strong theoretical links with actual brain processing. However, because of long processing times and the intricacies of complex data, further research is required to make it more effective and scalable in large databases.