Asymptotic Notation

Asymptotic notation: The notations we use to describe the asymptotic running time of an algorithm are defined in terms of functions whose domains are the set of natural numbers N = {0, 1, 2, ...}. Such notations are convenient for describing the worst-case running-time function T(n), which is usually defined only on integer input sizes. It is sometimes convenient, however, to abuse asymptotic notation in a variety of ways. For example, the notation is easily extended to the domain of real numbers or, alternatively, restricted to a subset of the natural numbers. It is important, however, to understand the precise meaning of the notation so that when it is abused, it is not misused. This section defines the basic asymptotic notations and also introduces some common abuses.

Θ-notation: The worst-case running time of insertion sort is T(n) = Θ(n²). Let us define what this notation means. For a given function g(n), we denote by Θ(g(n)) the set of functions

Θ(g(n)) = {f(n) : there exist positive constants c1, c2, and n0 such that 0 ≤ c1g(n) ≤ f(n) ≤ c2g(n) for all n ≥ n0}.[1]

A function f(n) belongs to the set Θ(g(n)) if there exist positive constants c1 and c2 such that it can be "sandwiched" between c1g(n) and c2g(n), for sufficiently large n. Because Θ(g(n)) is a set, we could write "f(n) ∈ Θ(g(n))" to indicate that f(n) is a member of Θ(g(n)). Instead, we will usually write "f(n) = Θ(g(n))" to express the same notion. This abuse of equality to denote set membership may at first appear confusing, but we shall see later in this section that it has advantages.
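The sandwich condition can be probed numerically. The following is a minimal sketch, not a proof: it checks the inequalities only on a finite range, so it can refute a particular choice of constants but can only suggest that a choice works. The helper name `sandwiched` and the example function f(n) = 3n + 5 are assumptions introduced here for illustration.

```python
def sandwiched(f, g, c1, c2, n0, n_max):
    """Return True if 0 <= c1*g(n) <= f(n) <= c2*g(n) for every n in [n0, n_max].

    This is a finite check only; the definition quantifies over all n >= n0.
    """
    return all(0 <= c1 * g(n) <= f(n) <= c2 * g(n) for n in range(n0, n_max + 1))


# Illustrative (hypothetical) example: f(n) = 3n + 5 compared with g(n) = n.
f = lambda n: 3 * n + 5
g = lambda n: n

# 3n <= 3n + 5 <= 4n holds exactly when n >= 5.
print(sandwiched(f, g, 3, 4, 5, 10_000))  # constants c1 = 3, c2 = 4, n0 = 5
print(sandwiched(f, g, 3, 4, 1, 10_000))  # same c1, c2 but n0 = 1 is too small
```

The second call fails because the upper inequality is violated for n < 5, which shows why the definition lets us pick the threshold n0 along with c1 and c2.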

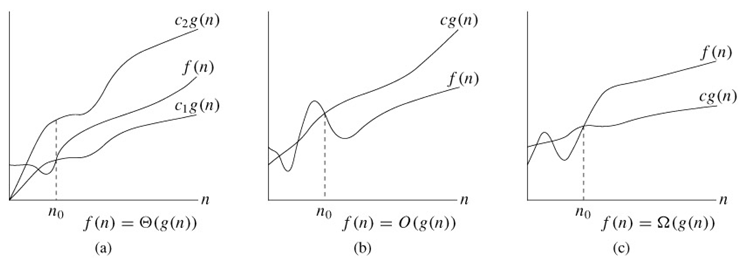

Figure 3.1(a) gives an intuitive picture of functions f(n) and g(n), where we have that f(n) = Θ(g(n)). For all values of n to the right of n0, the value of f(n) lies at or above c1g(n) and at or below c2g(n). In other words, for all n ≥ n0, the function f(n) is equal to g(n) to within a constant factor. We say that g(n) is an asymptotically tight bound for f(n).

Figure 3.1: Graphic examples of the Θ, O, and Ω notations. In each part, the value of n0 shown is the minimum possible value; any greater value would also work. (a) Θ-notation bounds a function to within constant factors. We write f(n) = Θ(g(n)) if there exist positive constants n0, c1, and c2 such that to the right of n0, the value of f(n) always lies between c1g(n) and c2g(n) inclusive. (b) O-notation gives an upper bound for a function to within a constant factor. We write f(n) = O(g(n)) if there are positive constants n0 and c such that to the right of n0, the value of f(n) always lies on or below cg(n). (c) Ω-notation gives a lower bound for a function to within a constant factor. We write f(n) = Ω(g(n)) if there are positive constants n0 and c such that to the right of n0, the value of f(n) always lies on or above cg(n).

The definition of Θ(g(n)) requires that every member f(n) ∈ Θ(g(n)) be asymptotically nonnegative, that is, that f(n) be nonnegative whenever n is sufficiently large. (An asymptotically positive function is one that is positive for all sufficiently large n.) Consequently, the function g(n) itself must be asymptotically nonnegative, or else the set Θ(g(n)) is empty. We shall therefore assume that every function used within Θ-notation is asymptotically nonnegative. This assumption holds for the other asymptotic notations defined in this chapter as well.

Informally, Θ-notation amounts to throwing away lower-order terms. Let us briefly justify this intuition by using the formal definition to show that (1/2)n² - 3n = Θ(n²). To do so, we must determine positive constants c1, c2, and n0 such that

c1 n² ≤ (1/2)n² - 3n ≤ c2 n²

for all n ≥ n0. Dividing by n² yields

c1 ≤ 1/2 - 3/n ≤ c2.

The right-hand inequality can be made to hold for any value of n ≥ 1 by choosing c2 ≥ 1/2. Likewise, the left-hand inequality can be made to hold for any value of n ≥ 7 by choosing c1 ≤ 1/14, because 1/2 - 3/n increases with n and equals 1/14 at n = 7. Thus, by choosing c1 = 1/14, c2 = 1/2, and n0 = 7, we can verify that (1/2)n² - 3n = Θ(n²). Certainly, other choices for the constants exist, but the important thing is that some choice exists. Note that these constants depend on the function (1/2)n² - 3n; a different function belonging to Θ(n²) would usually require different constants.
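As a sanity check on the constants chosen above, the sketch below evaluates both inequalities with exact rational arithmetic. It tests only a finite range (up to an arbitrary cutoff of 5000, an assumption of this example), so it supports the algebra rather than replacing it.

```python
from fractions import Fraction


def f(n):
    # f(n) = (1/2) n^2 - 3n, computed exactly with rationals
    return Fraction(n * n, 2) - 3 * n


c1, c2, n0 = Fraction(1, 14), Fraction(1, 2), 7

# Both inequalities hold at every tested n >= n0.
holds_from_n0 = all(c1 * n * n <= f(n) <= c2 * n * n for n in range(n0, 5001))

# The lower bound is tight at n = 7 and already fails at n = 6, where f(6) = 0.
tight_at_7 = f(7) == c1 * 49
fails_at_6 = not (c1 * 36 <= f(6))

print(holds_from_n0, tight_at_7, fails_at_6)
```

Using `Fraction` avoids floating-point rounding at n = 7, where the left-hand inequality holds with equality.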

We can also use the formal definition to verify that 6n³ ≠ Θ(n²). Suppose for the purpose of contradiction that c2 and n0 exist such that 6n³ ≤ c2 n² for all n ≥ n0. Dividing both sides by 6n² gives n ≤ c2/6, which cannot possibly hold for arbitrarily large n, since c2 is constant.
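The contradiction can be made concrete: for any candidate constants, one can exhibit an n at which the upper inequality breaks. The sketch below does this; the function name `witness` is hypothetical, and c2 is assumed to be a positive integer or `Fraction` so that the arithmetic stays exact.

```python
from fractions import Fraction


def witness(c2, n0):
    """Return some n >= n0 with 6*n**3 > c2*n**2.

    Any n > c2/6 works, so take the first integer past c2/6,
    or n0 itself if that is already larger.
    """
    return max(n0, int(c2 // 6) + 1)


# No matter how large c2 is chosen, a violating n exists.
for c2 in (1, 6, 1000, 10**9, Fraction(25, 2)):
    n = witness(c2, 1)
    assert 6 * n**3 > c2 * n**2
    print(c2, n)
```

Since a witness exists for every choice of c2 and n0, no pair of constants can satisfy the upper bound in the definition.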

Intuitively, the lower-order terms of an asymptotically positive function can be ignored in determining asymptotically tight bounds because they are insignificant for large n. A tiny fraction of the highest-order term is enough to dominate the lower-order terms. Thus, setting c1 to a value that is slightly smaller than the coefficient of the highest-order term and setting c2 to a value that is slightly larger permits the inequalities in the definition of Θ-notation to be satisfied. The coefficient of the highest-order term can likewise be ignored, since it only changes c1 and c2 by a constant factor equal to the coefficient.
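To illustrate the intuition for a general quadratic f(n) = an² + bn + c with a > 0, one workable choice of constants is c1 = a/4, c2 = 7a/4, and any n0 ≥ 2·max(|b|/a, √(|c|/a)). For such n, |b|n ≤ (a/2)n² and |c| ≤ (a/4)n², so f(n) stays between (a/4)n² and (7a/4)n². The sketch below checks this on a finite range for a few integer coefficient triples; the function name, the sample coefficients, and the range length are assumptions of this example, and n0 is rounded up generously to sidestep floating-point error.

```python
import math
from fractions import Fraction


def quadratic_bounds_hold(a, b, c, span=2000):
    """Check (a/4) n^2 <= a n^2 + b n + c <= (7a/4) n^2 for n0 <= n < n0 + span.

    Assumes integer coefficients with a > 0. Finite check only.
    """
    c1, c2 = Fraction(a, 4), Fraction(7 * a, 4)
    # Any n0 at least 2*max(|b|/a, sqrt(|c|/a)) works; round up with slack.
    n0 = math.floor(2 * max(abs(b) / a, math.sqrt(abs(c) / a))) + 1
    return all(
        c1 * n * n <= a * n * n + b * n + c <= c2 * n * n
        for n in range(n0, n0 + span)
    )


# Hypothetical sample coefficients, including negative lower-order terms.
for coeffs in [(1, 0, 0), (3, -100, 7), (2, 50, -900), (5, -1, -1)]:
    print(coeffs, quadratic_bounds_hold(*coeffs))
```

Note that c1 and c2 scale with the leading coefficient a, which is the sense in which that coefficient only changes the constants by a constant factor.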