Radix Sort

Radix sort is the algorithm used by the card-sorting machines you now find only in computer museums. The cards are organized into 80 columns, and in each column a hole can be punched in one of 12 places. The sorter can be mechanically "programmed" to examine a given column of each card in a deck and distribute the card into one of 12 bins depending on which place has been punched. An operator can then gather the cards bin by bin, so that cards with the first place punched are on top of cards with the second place punched, and so on.

For decimal digits, only 10 places are used in each column. (The other two places are used for encoding nonnumeric characters.) A d-digit number would then occupy a field of d columns. Since the card sorter can look at only one column at a time, the problem of sorting n cards on a d-digit number requires a sorting algorithm.

Intuitively, one might want to sort numbers on their most significant digit, sort each of the resulting bins recursively, and then combine the decks in order. Unfortunately, since the cards in 9 of the 10 bins must be put aside to sort each of the bins, this procedure generates many intermediate piles of cards that must be kept track of.
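To make the bookkeeping problem concrete, here is a minimal Python sketch of the most-significant-digit-first approach. It assumes non-negative integers with at most d decimal digits. The function name `msd_radix_sort` and its pile counter are illustrative additions, not part of the text. The counter tallies every non-empty bin that gets set aside while the recursion sorts the others.

```python
def msd_radix_sort(cards, d):
    """Sort non-negative ints of at most d decimal digits, most significant digit first.

    Returns the sorted list and the number of non-empty intermediate piles
    created along the way (the bins an operator would have to set aside).
    """
    piles = 0

    def sort_on(cards, pos):
        nonlocal piles
        if len(cards) <= 1 or pos == 0:
            return cards
        bins = [[] for _ in range(10)]
        for c in cards:
            bins[(c // 10 ** (pos - 1)) % 10].append(c)   # digit pos, where 1 = lowest
        piles += sum(1 for b in bins if b)              # every non-empty bin is a pile to track
        result = []
        for b in bins:                                   # recurse into each bin, in order 0..9
            result.extend(sort_on(b, pos - 1))
        return result

    return sort_on(list(cards), d), piles


print(msd_radix_sort([329, 457, 657, 839, 436, 720, 355], 3))
```

Even for these seven cards the recursion creates nine separate piles. The least-significant-digit-first method avoids this by always regathering a single deck.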

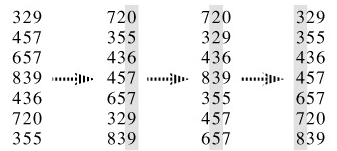

Radix sort solves the problem of card sorting counterintuitively by sorting on the least significant digit first. The cards are then combined into a single deck, with the cards in the 0 bin preceding the cards in the 1 bin preceding the cards in the 2 bin, and so on. Then the entire deck is sorted again on the second-least significant digit and recombined in a like manner. The process continues until the cards have been sorted on all d digits. Remarkably, at that point the cards are fully sorted on the d-digit number. Thus, only d passes through the deck are required to sort. Figure 8.3 shows how radix sort operates on a "deck" of seven 3-digit numbers.
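The procedure just described can be simulated with one list per bin. This is a sketch that assumes the cards carry non-negative integers of at most d decimal digits. `lsd_radix_sort` is a hypothetical name, and the seven-number deck is illustrative.

```python
def lsd_radix_sort(deck, d):
    """Sort non-negative ints of at most d decimal digits, least significant digit first.

    Each pass distributes the cards into 10 bins by one digit, then gathers
    the bins in order 0..9, keeping each bin's cards in arrival order.
    Returns the final deck and a snapshot of the deck after every pass.
    """
    passes = []
    for i in range(d):                              # i = 0 is the least significant digit
        bins = [[] for _ in range(10)]
        for card in deck:
            bins[(card // 10 ** i) % 10].append(card)   # appending preserves arrival order
        deck = [card for b in bins for card in b]       # bin 0 first, then bin 1, ...
        passes.append(deck)
    return deck, passes


deck = [329, 457, 657, 839, 436, 720, 355]
result, passes = lsd_radix_sort(deck, 3)
for p in passes:
    print(p)
# [720, 355, 436, 457, 657, 329, 839]   after the ones digit
# [720, 329, 436, 839, 355, 457, 657]   after the tens digit
# [329, 355, 436, 457, 657, 720, 839]   after the hundreds digit
```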

It is essential that the digit sorts in this algorithm be stable. The sort performed by a card sorter is stable, but the operator must be careful not to change the order of the cards as they come out of a bin, even though all the cards in a bin have the same digit in the chosen column.
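The role of stability can be seen by letting a simulated operator misbehave. In this sketch the hypothetical flag `gather_reversed` makes each bin come out in reverse order. Cards with the same digit then swap places, which undoes the work of the earlier passes.

```python
def radix_sort_with_gather(deck, d, gather_reversed=False):
    """LSD radix sort on decimal digits; optionally gather each bin in reverse.

    gather_reversed=True models an operator who flips each bin's cards while
    collecting them, which makes every digit sort unstable.
    """
    for i in range(d):
        bins = [[] for _ in range(10)]
        for card in deck:
            bins[(card // 10 ** i) % 10].append(card)
        deck = [card
                for b in bins
                for card in (reversed(b) if gather_reversed else b)]
    return deck


print(radix_sort_with_gather([12, 11], 2))                        # [11, 12]
print(radix_sort_with_gather([12, 11], 2, gather_reversed=True))  # [12, 11]
```

After the first pass both versions hold 11 before 12. On the tens pass both cards land in bin 1, and only the stable gather keeps that order.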

In a typical computer, which is a sequential random-access machine, radix sort is sometimes used to sort records of information that are keyed by multiple fields. For example, we might wish to sort dates by three keys: year, month, and day. We could run a sorting algorithm with a comparison function that, given two dates, compares years, and if there is a tie, compares months, and if another tie occurs, compares days. Alternatively, we could sort the information three times with a stable sort: first on day, next on month, and finally on year.
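Both approaches for dates are easy to try in Python, whose built-in `sorted` is documented to be stable. This sketch represents each date as a `(year, month, day)` tuple, an assumption made for illustration. Python's lexicographic tuple comparison plays the role of the year-then-month-then-day comparison function.

```python
from operator import itemgetter

# Each record is a (year, month, day) tuple.
dates = [(2021, 3, 14), (2019, 12, 1), (2021, 1, 30), (2019, 12, 25), (2021, 3, 2)]

# Approach 1: a single sort whose comparison looks at year, then month, then day.
by_comparison = sorted(dates)

# Approach 2: three stable sorts, least significant key first.
by_passes = dates
for key_index in (2, 1, 0):          # day, then month, then year
    by_passes = sorted(by_passes, key=itemgetter(key_index))

print(by_passes)
# [(2019, 12, 1), (2019, 12, 25), (2021, 1, 30), (2021, 3, 2), (2021, 3, 14)]
```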

The code for radix sort is straightforward. The following procedure assumes that each element in the n-element array A has d digits, where digit 1 is the lowest-order digit and digit d is the highest-order digit.

RADIX-SORT(A, d)
for i ← 1 to d
    do use a stable sort to sort array A on digit i
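A direct Python transcription of RADIX-SORT might look like the following sketch, assuming non-negative integer keys. The helper `digit` and the `base` parameter are our own additions. The built-in `sorted`, which is guaranteed stable, stands in for "a stable sort". Unlike the pseudocode, this version returns a new list rather than rearranging A.

```python
def digit(x, i, base=10):
    """Return digit i of non-negative integer x, where digit 1 is the lowest-order digit."""
    return (x // base ** (i - 1)) % base


def radix_sort(A, d, base=10):
    """RADIX-SORT(A, d): for i = 1..d, stably sort A on digit i.

    sorted() is stable, so elements with equal digit i keep the order
    established by the previous passes.
    """
    for i in range(1, d + 1):
        A = sorted(A, key=lambda x: digit(x, i, base))
    return A


print(radix_sort([329, 457, 657, 839, 436, 720, 355], 3))
# [329, 355, 436, 457, 657, 720, 839]
```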

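Lemma Given n d-digit numbers in which each digit can take on up to k possible values, RADIX-SORT correctly sorts these numbers in Θ(d(n + k)) time if the stable sort it uses takes Θ(n + k) time.

Proof The correctness of radix sort follows by induction on the column being sorted: after the pass on digit i, the numbers are sorted on their i lowest-order digits, because the stable sort on digit i orders numbers whose digit i differs and, for numbers whose digit i is equal, preserves the order established by the earlier passes. The analysis of the running time depends on the stable sort used as the intermediate sorting algorithm. When each digit is in the range 0 to k − 1 (so that it can take on k possible values), and k is not too large, counting sort is the obvious choice. Each pass over n d-digit numbers then takes time Θ(n + k). There are d passes, so the total time for radix sort is Θ(d(n + k)).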
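Using counting sort as the intermediate stable sort can be sketched as follows, assuming digits in the range 0 to k − 1 (so the base is k). The names `counting_sort_on_digit` and `radix_sort_counting` are hypothetical. The three loops make the Θ(n + k) cost of one pass visible. The first loop counts over the n elements, the second forms prefix sums over the k counters, and the third walks the elements backward to place them, which is what keeps the sort stable.

```python
def counting_sort_on_digit(A, i, k):
    """Stable counting sort of non-negative ints on digit i (1 = lowest) in base k."""
    def digit_of(x):
        return (x // k ** (i - 1)) % k

    count = [0] * k
    for x in A:                      # Θ(n): how many elements have each digit value
        count[digit_of(x)] += 1
    for j in range(1, k):            # Θ(k): count[j] = number of elements with digit <= j
        count[j] += count[j - 1]
    B = [0] * len(A)
    for x in reversed(A):            # Θ(n): scanning backward keeps equal digits in order
        dg = digit_of(x)
        count[dg] -= 1
        B[count[dg]] = x
    return B


def radix_sort_counting(A, d, k=10):
    """d passes of counting sort, for a total of Θ(d(n + k))."""
    for i in range(1, d + 1):
        A = counting_sort_on_digit(A, i, k)
    return A


print(radix_sort_counting([329, 457, 657, 839, 436, 720, 355], 3))
```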

When d is constant and k = O(n), radix sort runs in linear time. More generally, we have some flexibility in how to break each key into digits.

Lemma Given n b-bit numbers and any positive integer r ≤ b, RADIX-SORT correctly sorts these numbers in Θ((b/r)(n + 2^r)) time if the stable sort it uses takes Θ(n + k) time for inputs in the range 0 to k.

Proof For a value r ≤ b, we view each key as having d = ⌈b/r⌉ digits of r bits each. Each digit is an integer in the range 0 to 2^r − 1, so that we can use counting sort with k = 2^r − 1. (For example, we can view a 32-bit word as having four 8-bit digits, so that b = 32, r = 8, k = 2^r − 1 = 255, and d = b/r = 4.) Each pass of counting sort takes time Θ(n + k) = Θ(n + 2^r) and there are d passes, for a total running time of Θ(d(n + 2^r)) = Θ((b/r)(n + 2^r)).
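For b-bit keys, the r-bit digits can be extracted with shifts and masks instead of division. The sketch below assumes non-negative integers that fit in b bits, and `radix_sort_bits` is a hypothetical name. Each pass is a stable counting sort over the 2^r possible values of one r-bit digit.

```python
def radix_sort_bits(A, b, r):
    """Sort non-negative ints of at most b bits, viewed as ceil(b/r) digits of r bits.

    Each pass is a stable counting sort over digit values 0..2**r - 1, so one
    pass costs Θ(n + 2**r) and the whole sort Θ((b/r)(n + 2**r)).
    """
    d = -(-b // r)                   # ceil(b / r) passes
    mask = (1 << r) - 1              # selects the low r bits
    for p in range(d):
        shift = p * r                # digit p occupies bits shift .. shift + r - 1
        count = [0] * (1 << r)
        for x in A:
            count[(x >> shift) & mask] += 1
        for j in range(1, len(count)):
            count[j] += count[j - 1]
        B = [0] * len(A)
        for x in reversed(A):        # backward scan keeps the pass stable
            dg = (x >> shift) & mask
            count[dg] -= 1
            B[count[dg]] = x
        A = B
    return A


words = [0xDEADBEEF, 0x00000001, 0xCAFEBABE, 0x12345678, 0xFFFFFFFF, 0x0BADF00D]
print([hex(w) for w in radix_sort_bits(words, b=32, r=8)])
```

With b = 32 and r = 8 this makes d = 4 passes over 256 counters, matching the 32-bit example in the proof.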

For given values of n and b, we wish to choose the value of r, with r ≤ b, that minimizes the expression (b/r)(n + 2^r). If b < ⌊lg n⌋, then for any value of r ≤ b we have 2^r ≤ 2^b < n, so that (n + 2^r) = Θ(n). Thus, choosing r = b yields a running time of (b/b)(n + 2^b) = Θ(n), which is asymptotically optimal. If b ≥ ⌊lg n⌋, then choosing r = ⌊lg n⌋ gives the best time to within a constant factor, which we can see as follows. Choosing r = ⌊lg n⌋ yields a running time of Θ(bn/lg n), since 2^⌊lg n⌋ ≤ n and so n + 2^r = Θ(n). As we increase r above ⌊lg n⌋, the 2^r term in the numerator increases faster than the r term in the denominator, and so increasing r above ⌊lg n⌋ yields a running time of Ω(bn/lg n). If instead we were to decrease r below ⌊lg n⌋, then the b/r term increases and the n + 2^r term remains at Θ(n).
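The choice of r can also be checked numerically. This sketch evaluates the cost expression (b/r)(n + 2^r) with constant factors dropped and compares the rule from the analysis against a brute-force search over r. The names `cost` and `choose_r` are hypothetical. Because the analysis promises the best time only to within a constant factor, the brute-force minimizer need not equal ⌊lg n⌋.

```python
def cost(n, b, r):
    """The expression (b/r)(n + 2^r) from the analysis, with constant factors dropped."""
    return (b / r) * (n + 2 ** r)


def choose_r(n, b):
    """Rule from the analysis: r = b if b < floor(lg n), otherwise r = floor(lg n)."""
    lg_n = n.bit_length() - 1        # floor(lg n) for an integer n >= 1
    return b if b < lg_n else lg_n


n, b = 2 ** 20, 64
r = choose_r(n, b)
best = min(range(1, b + 1), key=lambda s: cost(n, b, s))   # brute-force minimizer
print(r, cost(n, b, r), best, cost(n, b, best))
```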

Is radix sort preferable to a comparison-based sorting algorithm, such as quicksort? If b = O(lg n), as is often the case, and we choose r ≈ lg n, then radix sort's running time is Θ(n), which appears to be better than quicksort's average-case time of Θ(n lg n). The constant factors hidden in the Θ-notation differ, however. Although radix sort may make fewer passes than quicksort over the n keys, each pass of radix sort may take significantly longer. Which sorting algorithm is preferable depends on the characteristics of the implementations, of the underlying machine (e.g., quicksort often uses hardware caches more effectively than radix sort), and of the input data. Moreover, the version of radix sort that uses counting sort as the intermediate stable sort does not sort in place, which many of the Θ(n lg n)-time comparison sorts do. Thus, when primary memory storage is at a premium, an in-place algorithm such as quicksort may be preferable.