Minimal Spanning Tree

MINIMAL SPANNING TREES

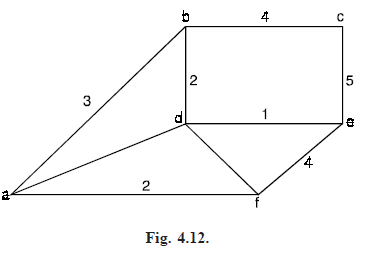

Weighted graph: A weighted graph is a graph G in which each edge e has been assigned a non-negative number w(e), called the weight (or length) of e. Figure (4.12) shows a weighted graph. The weight (or length) of a path in such a weighted graph G is defined to be the sum of the weights of the edges in the path. Many optimisation problems amount to finding, in a suitable weighted graph, a certain type of subgraph with minimum (or maximum) weight.

Minimal spanning tree: Let G be weighted graph. A minimal spanning tree of G is a spanning tree of G with minimum weight. The weighted graph G of Figure (4.12) shows six cities and the costs of laying railway links between certain pairs of cities. We want to set up railway links between the cities at minimum costs.

The solution can be represented by a subgraph. This subgraph must be spanning tree since it covers all the vertices (so that each city is in the road system), it must be connected (so that any city can be reached from any other), it must have unique simple path between each pair of vertices.

Thus what is needed is a spanning tree the sum of whose weights is minimum, i.e., a minimal spanning tree.

Algorithm for minimal spanning tree

There are several methods available for actually finding a minimal spanning tree in a given graph. Two algorithms due to Kruskal and Prim of finding a minimal spanning tree for a connected weighted graph where no weight is negative are presented below. These algorithms are example of greedy algorithms. A greedy algorithm is a procedure that makes an optimal choice at each of its steps without regard to previous choices.

Kruskal’s algorithm: Kruskal’s algorithm for finding a minimal spanning tree :

Input : A connected graph G with non-negative values assigned to each edge.

Output : A minimal spanning tree for G

Let G = (V, E) be graph and S = (Vs, Es) be the spanning tree to be found from G. Let | V | = n and E = {e1, e2, ..... em}. The stepwise algorithm is given below :

Method :

Step 1 : Select any edge of minimal value that is not a loop. This is the first edge of T. If there is more than one edge of minimal value, arbitrarily choose one of these edges. i.e., select an edge e1 from E such that e1 has least weight. Replace E = E – {e1} and Es = {e1}

Step 2 : Select any remaining edge of G having minimal value that does not form a circuit with the edges already included in T. i.e., select an edge ei from E such that ei has least weight and that it does not form a cycle with members of Es. Set E = E – {ei} and Es = Es ∪ {ei}.

Step 3 : Continue step 2 until T contains n – 1 edges, where n is the number of vertices of G. i.e., Repeat step 2 until | Es | = | V | – 1.

Suppose that a problem calls for finding an optimal solution (either maximum or minimum).

Suppose, further, than an algorithm is designed to make the optimal choice from the available data at each stage of the process. Any algorithm based on such an approach is called a greedy algorithm.

A greedy algorithm is usually the first heuristic algorithm one may try to implement and it does lead to optimal solutions sometimes, but not always. Kruskal’s algorithm is an example of a greedy algorithm that does, in fact, lead to an optimal solution.

Theorem . Let G = (V, E) be a loop-free weighted connected undirected graph. Any spanning tree for G that is obtained by Kruskal’s algorithm is optimal.

Proof. Let | V | = n, and let T be a spanning tree for G obtained by Kruskal’s algorithm.

The edges in T are labeled e1, e2, ...... en – 1, according to the order in which they are generated by the algorithm.

For each optimal tree T′ of G, define d(T′) = k if k is the smallest positive integer such that T and T′ both contain e1, e2, .., ek – 1, but ek ∉ T′.

Let T1 be an optimal tree for which d(T1) = r is maximal.

If r = n, then T = T1 and the result follows.

Otherwise, r ≤ n – 1 and adding edge er (of T) to T1 produces the cycle C, where there exists an edge er′ if C that is in T1 but not in T.

Start with tree T1. Adding er to T1 and deleting er′, we obtain a connected graph with n vertices and n – 1 edges.

This graph is a tree, T2. The weights of T1 and T2 satisfy wt(T2) = wt(T1) wt(er) – wt(er′).

Following the selection of e1, e2, ..., er – 1 in Kruskal’s algorithm, the edge er is chosen so that wt(er) is minimal and no cycle results when er is added to the subgraph H of G determined by e1, e2, ..., er – 1.

Since er′ produces no cycle when added to the subgraph H, by the minimality of wt(er) it follows that wt(er′) ≥ wt(er).

Hence wt(er) – wt(er′) ≤ 0, so wt(T2) ≤ wt(T1). But with T1 optimal, we must have wt(T2) = wt(T1), so T2 is optimal.

The tree T2 is optimal and has the edges e1, e2, ..., er – 1, er in common with T, so d(T2) ≥ r 1 > r = d(T1), contradicting the choice of T1.

Consequently, T1 = T and the tree T produced by Kruskal’s algorithm is optimal.