
Neural Network & Fuzzy Systems

Hidden Layer

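Introduction: Back-propagation is a way to determine the error values of the neurons in the hidden layers. These error values are needed to update the hidden-layer weights, because a hidden neuron has no target output of its own to compare against.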
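As a concrete illustration, here is a minimal Python sketch that assumes a hypothetical 2-2-1 network (two inputs, two sigmoid hidden neurons, one sigmoid output) and a squared-error loss E = 0.5 * (y - t)^2. The names `forward`, `backprop`, `sgd_step` and the starting weights are illustrative assumptions, not taken from these notes. The output error term is (y - t) * y * (1 - y). Each hidden neuron's error term is that output error sent back through the neuron's outgoing weight and multiplied by the sigmoid derivative h * (1 - h). The weight update then uses these terms.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward pass of a 2-2-1 sigmoid network; returns (hidden activations, output)."""
    h = [sigmoid(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(len(W1))]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y


def loss(x, t, W1, b1, W2, b2):
    """Squared error E = 0.5 * (y - t)^2 for one training example."""
    _, y = forward(x, W1, b1, W2, b2)
    return 0.5 * (y - t) ** 2


def backprop(x, t, W1, b1, W2, b2):
    """Return the hidden-layer error terms and the gradient of E for every weight."""
    h, y = forward(x, W1, b1, W2, b2)
    # Output error term: dE/d(net_out) = (y - t) * sigmoid'(net_out), where sigmoid' = y(1 - y).
    delta_out = (y - t) * y * (1.0 - y)
    # Hidden error terms: a hidden neuron has no target, so its error is the output
    # error passed back through its outgoing weight, times sigmoid'(net_j) = h_j(1 - h_j).
    delta_hidden = [W2[j] * delta_out * h[j] * (1.0 - h[j]) for j in range(len(h))]
    grads = {
        "W2": [delta_out * hj for hj in h],
        "b2": delta_out,
        "W1": [[dj * xi for xi in x] for dj in delta_hidden],
        "b1": list(delta_hidden),
    }
    return delta_hidden, grads


def sgd_step(x, t, W1, b1, W2, b2, lr=0.5):
    """One gradient-descent update of all weights using the back-propagated error terms."""
    _, g = backprop(x, t, W1, b1, W2, b2)
    W1 = [[w - lr * gw for w, gw in zip(row, grow)] for row, grow in zip(W1, g["W1"])]
    b1 = [b - lr * gb for b, gb in zip(b1, g["b1"])]
    W2 = [w - lr * gw for w, gw in zip(W2, g["W2"])]
    b2 = b2 - lr * g["b2"]
    return W1, b1, W2, b2


# Hypothetical starting weights and one XOR training example: input (1, 0), target 1.
W1 = [[0.5, -0.4], [0.3, 0.8]]
b1 = [0.1, -0.2]
W2 = [0.7, -0.6]
b2 = 0.05
x, t = [1.0, 0.0], 1.0

deltas, _ = backprop(x, t, W1, b1, W2, b2)
print("hidden error terms:", deltas)
before = loss(x, t, W1, b1, W2, b2)
W1, b1, W2, b2 = sgd_step(x, t, W1, b1, W2, b2)
print("loss before / after one update:", before, loss(x, t, W1, b1, W2, b2))
```

A quick way to check such code is to compare the back-propagated gradients with finite differences of `loss`; they should agree to many decimal places.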

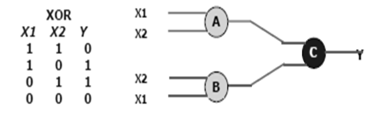

A classic example of where back-propagation is needed is the XOR problem.

Consider the simple graph shown below.

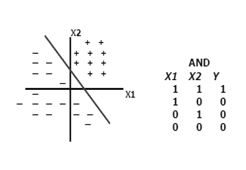


- All points on the right side of the line are +ve, therefore the output of the neuron should be +ve.
- All points on the left side of the line are -ve, therefore the output of the neuron should be -ve.
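A single neuron with a sign (threshold) activation implements this rule directly. Its weights w1, w2 and bias b define the line w1*x1 + w2*x2 + b = 0, and the sign of w1*x1 + w2*x2 + b tells which side of the line a point lies on. The line used in this Python sketch, x2 = 2*x1 - 1, is a hypothetical stand-in for the one in the graph, and `neuron_output` is an illustrative name.

```python
def neuron_output(x, w, b):
    """Single neuron with a sign activation: +1 if w . x + b > 0, otherwise -1."""
    net = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if net > 0 else -1


# Hypothetical line x2 = 2*x1 - 1, rewritten as 2*x1 - x2 - 1 = 0.
# With these weights, points to the right of (below) the line give net > 0.
w = (2.0, -1.0)
b = -1.0

for point in [(2.0, 0.0), (3.0, 1.0), (0.0, 0.0), (0.0, 2.0)]:
    print(point, neuron_output(point, w, b))
```

The first two points lie to the right of the line and give +1; the last two lie to the left and give -1. Treating a point exactly on the line as -1 is an arbitrary choice made here.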

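A network can easily be trained to operate as an AND gate using only one neuron, because AND is linearly separable: a single straight line separates the input (1, 1), whose output is 1, from the other three inputs, whose output is 0.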
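One common way to train that single neuron is the perceptron learning rule, sketched below in Python under assumed settings (step activation with outputs 0/1, zero initial weights, learning rate 1, at most 100 epochs). The function name `train_perceptron` is illustrative. The same routine is also run on XOR for contrast.

```python
def step(net):
    return 1 if net > 0 else 0


def train_perceptron(samples, lr=1.0, max_epochs=100):
    """Perceptron learning rule for one neuron with a step activation.

    Returns (weights, bias, converged); converged is True once a whole
    epoch passes with no misclassified sample.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            y = step(w[0] * x[0] + w[1] * x[1] + b)
            err = target - y
            if err != 0:
                errors += 1
                # Nudge the weights and bias so this sample's output moves toward its target.
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if errors == 0:
            return w, b, True
    return w, b, False


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b, ok = train_perceptron(AND)
print("AND converged:", ok, [step(w[0] * x0 + w[1] * x1 + b) for (x0, x1), _ in AND])
_, _, ok_xor = train_perceptron(XOR)
print("XOR converged:", ok_xor)
```

With these settings the AND run converges and reproduces the AND truth table, while the XOR run uses up all its epochs without converging, since no single line separates the XOR classes.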

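An XOR problem, however, can't be solved using only one neuron, because no single straight line separates the inputs (0, 1) and (1, 0), whose output is 1, from (0, 0) and (1, 1), whose output is 0. If we want to train an XOR, we need 3 neurons, fully connected in a feed-forward network as shown below.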
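To see why three neurons are enough, here is a Python sketch of a 2-2-1 feed-forward network with hand-chosen, hypothetical weights rather than learned ones. Each hidden neuron draws one line: one behaves as OR and the other as NAND. The output neuron ANDs them, so it fires only for inputs that lie between the two lines. Finding such weights automatically is the job of back-propagation. With a differentiable activation such as the sigmoid in place of the step function, the network can typically learn a comparable solution.

```python
def step(net):
    return 1 if net > 0 else 0


def neuron(x, w, b):
    """One threshold neuron: step(w . x + b)."""
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)


def xor_net(x1, x2):
    """Three neurons in a fully connected 2-2-1 feed-forward network."""
    h1 = neuron((x1, x2), (1.0, 1.0), -0.5)    # hidden neuron 1 acts as OR
    h2 = neuron((x1, x2), (-1.0, -1.0), 1.5)   # hidden neuron 2 acts as NAND
    return neuron((h1, h2), (1.0, 1.0), -1.5)  # output neuron acts as AND


for a in (0, 1):
    for c in (0, 1):
        print(a, c, xor_net(a, c))
```

The printed table matches XOR: 0 for (0, 0) and (1, 1), and 1 for (0, 1) and (1, 0).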