Learning By Example

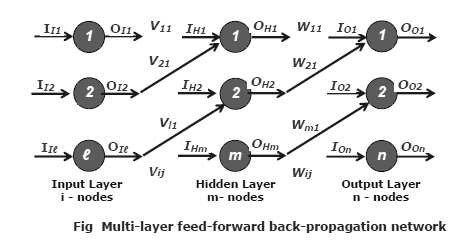

Description: Consider the multi-layer feed-forward back-propagation network below. The subscripts I, H and O denote input, hidden and output neurons. The weight of the arc between the ith input neuron and the jth hidden neuron is $V_{ij}$. The weight of the arc between the ith hidden neuron and the jth output neuron is $W_{ij}$.
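As a minimal sketch of this notation, assuming plain Python lists and hypothetical layer sizes (3 inputs, 2 hidden neurons, 1 output), the weights can be stored as an ℓ × m matrix V and an m × n matrix W, where `V[i][j]` holds $V_{ij}$ and `W[i][j]` holds $W_{ij}$. The random initialisation and the helper `make_weights` are assumptions for illustration only, and indices are 0-based in code whereas the text counts from 1.

```python
import random

def make_weights(rows, cols, seed=0):
    """Return a rows x cols matrix of small random weights (hypothetical initialisation)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# Hypothetical layer sizes: n_in = l inputs, n_hidden = m, n_out = n outputs.
n_in, n_hidden, n_out = 3, 2, 1

V = make_weights(n_in, n_hidden, seed=1)   # V[i][j]: input neuron i -> hidden neuron j
W = make_weights(n_hidden, n_out, seed=2)  # W[i][j]: hidden neuron i -> output neuron j

print(len(V), len(V[0]))  # 3 2
print(len(W), len(W[0]))  # 2 1
```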

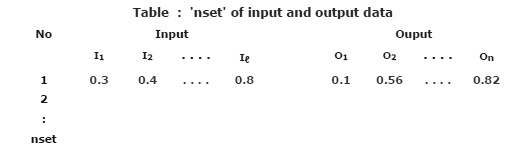

The table below lists nset sets of input and output data. Each set pairs ℓ inputs with the corresponding n outputs.
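The table itself is not reproduced here, so the following is only a hypothetical illustration of its shape: a training set of nset pairs stored as a Python list, each pair holding ℓ input values and n output values, plus a small check that every pair has the expected sizes. The numbers are placeholders, not data from the table, and `check_dataset` is a made-up helper.

```python
from typing import List, Tuple

# Each training pair: (inputs of length l, target outputs of length n).
Pair = Tuple[List[float], List[float]]

def check_dataset(data: List[Pair], n_in: int, n_out: int) -> int:
    """Verify every pair has n_in inputs and n_out outputs; return nset."""
    for k, (x, y) in enumerate(data):
        if len(x) != n_in or len(y) != n_out:
            raise ValueError(f"pair {k}: expected {n_in} inputs and {n_out} outputs")
    return len(data)

# Placeholder values (hypothetical): l = 3 inputs and n = 1 output per set.
training_set: List[Pair] = [
    ([0.0, 0.5, 1.0], [0.0]),
    ([1.0, 0.5, 0.0], [1.0]),
]

nset = check_dataset(training_set, n_in=3, n_out=1)
print(nset)  # 2
```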

Computation of Input Layers

• Input Layer Computation

Consider a linear activation function. If the output of each input neuron equals its input, that is, the transfer function is the identity (gain 1), then

$$\underset{\ell \times 1}{\{O\}_I} = \underset{\ell \times 1}{\{I\}_I}$$

where the ℓ × 1 under each vector gives its size (rows × columns).
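A minimal sketch of this step, assuming a linear transfer function with gain 1: each input neuron passes its input straight through, so the ℓ × 1 output vector equals the ℓ × 1 input vector. The function name `input_layer`, its `gain` parameter and the sample values are hypothetical.

```python
from typing import List

def input_layer(inputs: List[float], gain: float = 1.0) -> List[float]:
    """Linear activation O = gain * I per input neuron; gain = 1 gives {O}_I = {I}_I."""
    return [gain * x for x in inputs]

I_I = [0.2, -0.6, 0.9]   # hypothetical l x 1 input vector (l = 3)
O_I = input_layer(I_I)
print(O_I == I_I)  # True
```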

The hidden neurons are connected by synapses to the input neurons.

- Let $V_{ij}$ be the weight of the arc between the ith input neuron and the jth hidden neuron.

- The input to each hidden neuron is the weighted sum of the outputs of the input neurons, which gives the equation

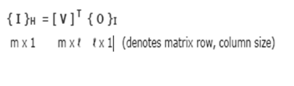
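The weighted sum can be written out with explicit loops. This sketch assumes the pth hidden neuron receives $I_{Hp} = \sum_{i=1}^{\ell} V_{ip}\, O_{Ii}$, which follows from the definition of $V_{ij}$; the function name `hidden_inputs` and the numbers are hypothetical, and indices are 0-based in code.

```python
from typing import List

def hidden_inputs(V: List[List[float]], O_I: List[float]) -> List[float]:
    """Return I_H where I_H[p] = sum over i of V[i][p] * O_I[i]."""
    n_in, n_hidden = len(V), len(V[0])
    if len(O_I) != n_in:
        raise ValueError("O_I must have one entry per input neuron")
    I_H = []
    for p in range(n_hidden):
        total = 0.0
        for i in range(n_in):
            total += V[i][p] * O_I[i]  # contribution of input neuron i to hidden neuron p
        I_H.append(total)
    return I_H

# Hypothetical 3 x 2 weight matrix (3 inputs, 2 hidden neurons) and input-layer output.
V = [[1.0, 0.0],
     [2.0, 1.0],
     [0.0, 3.0]]
O_I = [1.0, 2.0, 3.0]
print(hidden_inputs(V, O_I))  # [5.0, 11.0]
```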

Denote the weight matrix (also called the connectivity matrix) between the input neurons and the hidden neurons as [V].

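With m hidden neurons, [V] is an ℓ × m matrix, and the inputs to the hidden neurons can be written as

$$\underset{m \times 1}{\{I\}_H} = \underset{m \times \ell}{[V]^T}\;\underset{\ell \times 1}{\{O\}_I}$$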
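In matrix form the weighted sum becomes a transpose followed by a matrix-vector product: [V] is ℓ × m, so $[V]^T$ is m × ℓ, and multiplying it by the ℓ × 1 vector $\{O\}_I$ yields the m × 1 vector $\{I\}_H$. A sketch in plain Python follows; the helpers `transpose` and `matvec` and the sample values are hypothetical. With NumPy arrays the same step would be written `V.T @ O_I`.

```python
from typing import List

Matrix = List[List[float]]

def transpose(A: Matrix) -> Matrix:
    """Return A^T (rows become columns)."""
    return [list(col) for col in zip(*A)]

def matvec(A: Matrix, x: List[float]) -> List[float]:
    """Return A x for an r x c matrix A and a length-c vector x."""
    if any(len(row) != len(x) for row in A):
        raise ValueError("column count of A must equal len(x)")
    return [sum(a * b for a, b in zip(row, x)) for row in A]

# Hypothetical l x m weight matrix (l = 3 inputs, m = 2 hidden neurons).
V = [[1.0, 0.0],
     [2.0, 1.0],
     [0.0, 3.0]]
O_I = [1.0, 2.0, 3.0]        # l x 1 output of the input layer

V_T = transpose(V)           # m x l
I_H = matvec(V_T, O_I)       # m x 1 input to the hidden layer
print(len(V_T), len(V_T[0]))  # 2 3
print(I_H)                    # [5.0, 11.0]
```

Each entry of `I_H` matches the loop-based weighted sum, since row p of $[V]^T$ is column p of [V].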