RAM-Based Neurons and Networks

Introduction: Advances in digital electronic circuits and Boolean algebra were influential in the development of connectionist models based on the idea of random-access memory (RAM). A RAM is a device in which binary information can be stored and retrieved, each information element having its own directly addressable location. The addresses are binary vectors: if n address lines are used, $2^n$ addressable elements can be stored and retrieved, each consisting of m bits.
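To make the addressing concrete, here is a minimal Python sketch of a RAM with n address lines and m-bit words. The class name `RAM`, its `read`/`write` methods, and the most-significant-bit-first decoding of the address vector are illustrative assumptions, not part of any particular model.

```python
from typing import List, Sequence


class RAM:
    """A RAM with n address lines and m-bit words (2**n addressable words)."""

    def __init__(self, n: int, m: int) -> None:
        self.n = n
        self.m = m
        # One m-bit word per address, all initialised to zeros.
        self.words: List[List[int]] = [[0] * m for _ in range(2 ** n)]

    def _index(self, address: Sequence[int]) -> int:
        # Assumption: the binary address vector is read most significant bit first.
        if len(address) != self.n or any(b not in (0, 1) for b in address):
            raise ValueError(f"address must be {self.n} binary digits")
        index = 0
        for bit in address:
            index = (index << 1) | bit
        return index

    def write(self, address: Sequence[int], word: Sequence[int]) -> None:
        # Store an m-bit word at the directly addressed location.
        if len(word) != self.m:
            raise ValueError(f"word must have {self.m} bits")
        self.words[self._index(address)] = list(word)

    def read(self, address: Sequence[int]) -> List[int]:
        # Retrieve a copy of the word stored at the addressed location.
        return list(self.words[self._index(address)])


ram = RAM(n=3, m=4)             # 2**3 = 8 words of 4 bits each
ram.write([1, 0, 1], [1, 1, 0, 0])
print(len(ram.words))           # 8
print(ram.read([1, 0, 1]))      # [1, 1, 0, 0]
print(ram.read([0, 0, 0]))      # [0, 0, 0, 0]
```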

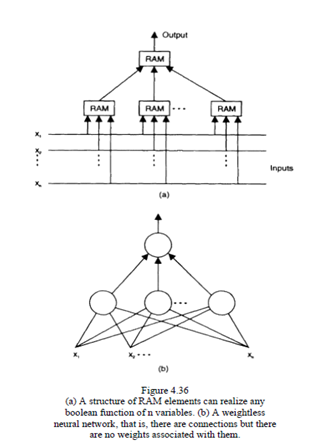

The figure shows a RAM-based structure and its representation as a neural network, in which RAM elements with n inputs and one output are used. Several models have been built on this simple structure and put into practice; they differ in their training algorithms and application-oriented characteristics.
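The n-input, one-output RAM element can be sketched as a lookup table of $2^n$ single bits. The sketch below assumes the simplest possible training rule, writing the desired output bit at the location addressed by the input pattern; the models mentioned above use their own, different training algorithms. The names `RAMNeuron`, `train`, and `recall` are hypothetical.

```python
from typing import Sequence


class RAMNeuron:
    """An n-input, one-output RAM neuron: a lookup table of 2**n single bits."""

    def __init__(self, n: int) -> None:
        self.n = n
        self.table = [0] * (2 ** n)   # one output bit per possible input pattern

    def _address(self, inputs: Sequence[int]) -> int:
        # The binary input vector is used directly as the memory address.
        if len(inputs) != self.n:
            raise ValueError(f"expected {self.n} inputs")
        addr = 0
        for bit in inputs:
            addr = (addr << 1) | (1 if bit else 0)
        return addr

    def train(self, inputs: Sequence[int], target: int) -> None:
        # Assumed rule: write the desired output bit at the addressed location.
        self.table[self._address(inputs)] = 1 if target else 0

    def recall(self, inputs: Sequence[int]) -> int:
        # Recall reads the bit stored at the addressed location.
        return self.table[self._address(inputs)]


# Teach a 2-input neuron the XOR function; no weights are involved.
neuron = RAMNeuron(n=2)
for x1 in (0, 1):
    for x2 in (0, 1):
        neuron.train([x1, x2], x1 ^ x2)

print([neuron.recall([a, b]) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```

Because the table holds one bit for every possible input pattern, a single RAM neuron can realise any Boolean function of its n inputs, including XOR, at the cost of memory that grows as $2^n$.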

Building on this idea, Igor Aleksander (1989) developed so-called weightless neural networks. The connections between neurons do not have real-valued weights; they are binary, {0,1}, meaning that a connection either exists or does not exist.
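The binary-connection idea can be illustrated with a small layer of RAM neurons whose inputs are selected by a 0/1 connection matrix. This is a hypothetical sketch: the matrix `CONNECTIONS`, the class `WeightlessLayer`, and its write-the-target training rule are assumptions chosen for illustration, not Aleksander's specific architecture.

```python
from typing import List, Sequence

# Hypothetical 0/1 connection matrix: row j marks which of the 4 inputs feed neuron j.
CONNECTIONS = [
    [1, 1, 0, 0],   # neuron 0 sees inputs 0 and 1
    [0, 0, 1, 1],   # neuron 1 sees inputs 2 and 3
    [1, 0, 0, 1],   # neuron 2 sees inputs 0 and 3
]


def connected_inputs(row: Sequence[int], x: Sequence[int]) -> List[int]:
    # A connection either exists (1) or does not (0); there is no real-valued weight.
    return [xi for ci, xi in zip(row, x) if ci == 1]


def to_address(bits: Sequence[int]) -> int:
    # Interpret the selected input bits as a binary address (MSB first).
    addr = 0
    for b in bits:
        addr = (addr << 1) | b
    return addr


class WeightlessLayer:
    def __init__(self, connections: Sequence[Sequence[int]]) -> None:
        self.connections = [list(r) for r in connections]
        # One lookup table per neuron, sized by its number of connections.
        self.tables = [[0] * (2 ** sum(r)) for r in self.connections]

    def train(self, x: Sequence[int], targets: Sequence[int]) -> None:
        # Assumed rule: each neuron stores its target bit at the addressed location.
        for j, row in enumerate(self.connections):
            self.tables[j][to_address(connected_inputs(row, x))] = targets[j]

    def recall(self, x: Sequence[int]) -> List[int]:
        # Each neuron reads the bit addressed by its connected inputs.
        return [self.tables[j][to_address(connected_inputs(row, x))]
                for j, row in enumerate(self.connections)]


layer = WeightlessLayer(CONNECTIONS)
layer.train([1, 0, 1, 1], [1, 1, 0])
print(layer.recall([1, 0, 1, 1]))   # [1, 1, 0]
print(layer.recall([1, 0, 0, 0]))   # [1, 0, 0]
```

No arithmetic on weights takes place: a connection only decides whether an input bit becomes part of a neuron's address.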

Probabilistic RAM (pRAM) models, developed by John Taylor and his group, associate each address with a probability that the output of the RAM-neuron is 1. These probabilities are learned during training and used during recall. For example, if the input vector 1100011 is supplied to a pRAM, an output of 1 may be generated with a probability of 0.7, a value learned during the training phase. A random number generator is used to generate output signals (1s) with the given probability. Various reinforcement learning algorithms have been developed for pRAM models.
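The pRAM recall step, and one way such probabilities could be adjusted by reinforcement, can be sketched in Python. The class `PRAM`, the initial probability of 0.5, and the reward/penalty update in `reinforce` are assumptions for illustration; they are not the specific learning rules developed by Taylor's group.

```python
import random
from typing import Optional, Sequence


class PRAM:
    """pRAM sketch: each address stores the probability that the output is 1."""

    def __init__(self, n: int, rng: Optional[random.Random] = None) -> None:
        self.n = n
        self.probs = [0.5] * (2 ** n)     # assumption: every address starts at 0.5
        self.rng = rng or random.Random()

    def address(self, inputs: Sequence[int]) -> int:
        # Binary input vector -> memory address (MSB first).
        addr = 0
        for bit in inputs:
            addr = (addr << 1) | bit
        return addr

    def recall(self, inputs: Sequence[int]) -> int:
        # The random number generator emits 1 with the stored probability.
        p = self.probs[self.address(inputs)]
        return 1 if self.rng.random() < p else 0

    def reinforce(self, inputs: Sequence[int], output: int, reward: bool,
                  rate: float = 0.1) -> None:
        # Hypothetical reward/penalty rule: move p toward the emitted output if
        # rewarded, and toward the opposite output if penalised.
        u = self.address(inputs)
        target = output if reward else 1 - output
        self.probs[u] += rate * (target - self.probs[u])


# Recall: the input vector 1100011 with a probability of 0.7 set by hand,
# standing in for a value learned during training.
pram = PRAM(n=7, rng=random.Random(0))
x = [1, 1, 0, 0, 0, 1, 1]
pram.probs[pram.address(x)] = 0.7
samples = [pram.recall(x) for _ in range(10_000)]
print(sum(samples) / len(samples))   # close to 0.7

# Reinforcement: reward an output of 1, penalise an output of 0.
learner = PRAM(n=2, rng=random.Random(1))
for _ in range(50):
    out = learner.recall([1, 0])
    learner.reinforce([1, 0], out, reward=(out == 1))
print(learner.probs[learner.address([1, 0])])   # approaches 1.0
```

With this reward scheme both branches push the stored probability toward 1, so the address learns to fire reliably; a real pRAM rule would also take the learning rate and penalty weighting from the chosen algorithm.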