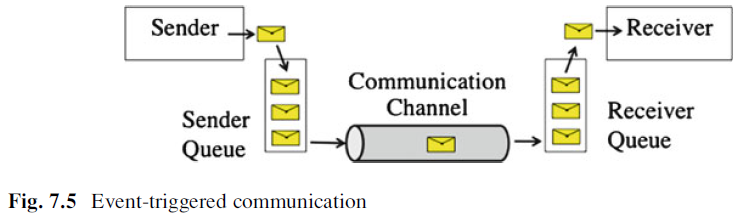

Event-triggered Communication

Event-Triggered Communication: A sender sends a message whenever a significant event (e.g., the termination of a task or an interrupt signal) occurs at the sender. This message is placed in a queue at the sender's site until the basic message transport service (BMTS) is ready to transport it to the receiver. The communication channel can be event-triggered, rate-constrained, or time-triggered. After the message arrives at the receiver, it is placed in a receiver queue until the receiver consumes it. Using the CRC field contained in every message, the BMTS checks at the receiver's site whether the contents of a message have been corrupted during transport and simply discards corrupted messages. From the architectural point of view, a BMTS is characterized by a maximum bandwidth, a transport latency, a jitter, and a reliability for the transport of correct BMTS messages. These transport parameters can be characterized by probability distributions.
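The message path described above (sender queue, BMTS, CRC check, receiver queue) can be sketched as a toy simulation. This is a minimal sketch under stated assumptions: CRC-32 from Python's `zlib` stands in for the message's CRC field, the channel corrupts a message by flipping a single bit with probability `p_corrupt`, and all function names (`frame`, `crc_ok`, `transport`, `event_triggered_transfer`) are hypothetical.

```python
import random
import zlib
from collections import deque


def frame(payload: bytes) -> bytes:
    """Append a CRC-32 checksum (assumed stand-in for the message's CRC field)."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def crc_ok(msg: bytes) -> bool:
    """Receiver-side check: recompute the CRC over the payload and compare."""
    payload, crc = msg[:-4], msg[-4:]
    return zlib.crc32(payload).to_bytes(4, "big") == crc


def transport(msg: bytes, rng: random.Random, p_corrupt: float) -> bytes:
    """Toy channel: with probability p_corrupt, flip one randomly chosen bit."""
    if rng.random() < p_corrupt:
        data = bytearray(msg)
        bit = rng.randrange(len(data) * 8)
        data[bit // 8] ^= 1 << (bit % 8)
        return bytes(data)
    return msg


def event_triggered_transfer(events, p_corrupt=0.2, seed=1):
    """Return (messages consumed by the receiver, number discarded by the CRC check)."""
    rng = random.Random(seed)
    sender_queue, receiver_queue = deque(), deque()
    for payload in events:          # each significant event produces one message
        sender_queue.append(frame(payload))
    discarded = 0
    while sender_queue:             # the BMTS transports one queued message at a time
        msg = transport(sender_queue.popleft(), rng, p_corrupt)
        if crc_ok(msg):
            receiver_queue.append(msg[:-4])
        else:
            discarded += 1          # corrupted messages are simply discarded
    consumed = []
    while receiver_queue:           # the receiver consumes messages in arrival order
        consumed.append(receiver_queue.popleft())
    return consumed, discarded
```

Because CRC-32 detects every single-bit error, each corrupted message in this toy model is discarded rather than delivered; the receiver never learns that a message was lost.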

Whenever queues are involved, the possibility of queue overflow must be considered. The sender's queue overflows if the transmission rate of the sender is larger than the capacity of the network, and the receiver's queue overflows if the delivery rate of the network is larger than the reception rate of the receiver. Different event-triggered protocols take different approaches to the handling of queue overflow.
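The overflow condition can be made concrete with a discrete-time sketch of a bounded sender queue. It assumes, for illustration only, a constant number of events per tick (`send_rate`), a network that removes at most `drain_rate` messages per tick, and a drop-on-full policy; the function name is hypothetical. The same model applies to the receiver's queue if `send_rate` is read as the network's delivery rate and `drain_rate` as the receiver's reception rate.

```python
def simulate_overflow(send_rate: int, drain_rate: int, capacity: int, ticks: int) -> int:
    """Return the number of messages lost because the bounded queue was full."""
    queue_len = 0
    lost = 0
    for _ in range(ticks):
        # Events during this tick each try to enqueue one message.
        for _ in range(send_rate):
            if queue_len < capacity:
                queue_len += 1
            else:
                lost += 1                      # queue full: message is lost
        # The network transports up to drain_rate messages per tick.
        queue_len -= min(queue_len, drain_rate)
    return lost


if __name__ == "__main__":
    print(simulate_overflow(send_rate=2, drain_rate=2, capacity=5, ticks=100))  # rates match
    print(simulate_overflow(send_rate=3, drain_rate=2, capacity=5, ticks=100))  # sender faster
```

With matched rates no message is lost; once the sending rate exceeds the draining rate, the queue fills after a few ticks and from then on loses the surplus every tick, no matter how large `capacity` is chosen.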

It is impossible to provide temporal guarantees in an open event-triggered communication scenario. If every sending component in an open communication scenario is autonomous and is allowed to start sending a message at any instant, then it can happen that all sending components send a message to the same receiver at the same instant (the critical instant), thus overloading the channel to the receiver. In fielded communication systems, we find three strategies to handle such a scenario: (1) the communication system stores messages temporarily in a buffer in front of the receiver, (2) the communication system exerts backpressure on the sender, or (3) the communication system discards some messages. Buffering and backpressure delay messages by an amount that depends on how many senders happen to be active at the same instant, and discarding loses messages. None of these strategies is therefore acceptable for real-time data.
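The critical instant can be illustrated with a toy model in which `n_senders` components each send one message at t = 0 to the same receiver and the link forwards one message per slot. This is a sketch under assumed parameters (the function name and the slot model are hypothetical): with an unbounded buffer (strategy 1) the worst-case latency equals the number of senders, and with a bounded buffer (strategy 3) the surplus is discarded. Backpressure (strategy 2) would produce the same waiting times, only at the senders instead of at the buffer.

```python
from typing import List, Optional, Tuple


def critical_instant(n_senders: int, buffer_size: Optional[int] = None) -> Tuple[List[int], int]:
    """All senders emit one message at t=0; the link forwards one message per slot.

    Returns (latency in slots of each delivered message, number discarded).
    buffer_size=None models an unbounded intermediate buffer.
    """
    latencies = []
    discarded = 0
    for position in range(n_senders):
        if buffer_size is not None and position >= buffer_size:
            discarded += 1                  # strategy (3): drop what does not fit
        else:
            latencies.append(position + 1)  # strategy (1): wait for earlier messages
    return latencies, discarded


if __name__ == "__main__":
    for n in (2, 10, 100):
        print(n, "senders -> worst-case latency", max(critical_instant(n)[0]), "slots")
```

In an open system nothing bounds `n_senders`, so neither the worst-case latency of strategy (1) nor the loss of strategy (3) can be bounded in advance.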

A lower-level protocol, e.g., a link-level protocol that increases the reliability of a link at the cost of additional jitter, is not directly visible at the BMTS level (cf. Fig. 7.5), although its effects, the increased reliability and the increased jitter, are reflected in the characterization of the BMTS service. When a BMTS message has been sent across the Internet, we don't know what types and how many different low-level protocols have been activated.
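The reliability/jitter trade-off of such a link-level protocol can be quantified with a small calculation. This sketch assumes a simple retransmission scheme (not specified in the text): each attempt succeeds independently with probability `p_success`, takes `attempt_time`, and the link tries at most `max_attempts` times. Then the reliability is 1 - (1 - p)^k, while a delivered message may take anywhere from one to k attempt times, so the added jitter is (k - 1) times the attempt time. The function name is hypothetical.

```python
def link_retransmission(p_success: float, max_attempts: int, attempt_time: float):
    """Return (reliability, jitter) of a link that retries up to max_attempts times."""
    # The message is lost only if every attempt fails.
    reliability = 1.0 - (1.0 - p_success) ** max_attempts
    # Best case: first attempt succeeds; worst case: the last allowed attempt succeeds.
    min_latency = attempt_time
    max_latency = max_attempts * attempt_time
    jitter = max_latency - min_latency
    return reliability, jitter


if __name__ == "__main__":
    for k in (1, 2, 3):
        r, j = link_retransmission(0.9, k, attempt_time=1.0)
        print(f"attempts={k}: reliability={r:.3f}, jitter={j:.1f}")
```

Each additional attempt improves reliability but widens the latency interval, which is exactly the effect that shows up in the BMTS characterization without the lower-level protocol itself being visible.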

The implementation of exactly-once semantics requires a bidirectional information flow between sender and receiver, which is not provided at the BMTS level of our model. In our model, exactly-once semantics must therefore be implemented by a higher-level protocol that uses two or more BMTS messages that are independent from the point of view of the communication service.
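One common way to build such a higher-level protocol is to combine sequence numbers, acknowledgments, and retransmission with duplicate suppression at the receiver. The sketch below assumes that technique (the text does not prescribe one); `Receiver`, `send_exactly_once`, the loss model, and `max_tries` are hypothetical. The data message and its acknowledgment are two independent one-way messages, each of which may be lost.

```python
import random


class Receiver:
    def __init__(self):
        self.delivered = []   # messages handed to the application
        self.seen = set()     # sequence numbers already delivered

    def on_message(self, seq, payload):
        if seq not in self.seen:      # suppress duplicates caused by retransmission
            self.seen.add(seq)
            self.delivered.append(payload)
        return seq                    # acknowledgment: a second, independent message


def send_exactly_once(payloads, receiver, loss, seed=0, max_tries=100):
    rng = random.Random(seed)
    for seq, payload in enumerate(payloads):
        for _ in range(max_tries):
            if rng.random() < loss:   # data message lost: sender times out and retries
                continue
            ack = receiver.on_message(seq, payload)
            if rng.random() < loss:   # acknowledgment lost: sender retries, receiver dedups
                continue
            if ack == seq:
                break
        else:
            raise TimeoutError(f"no acknowledgment for message {seq}")
    return receiver.delivered


if __name__ == "__main__":
    print(send_exactly_once(["a", "b", "c"], Receiver(), loss=0.3, seed=42))
```

Retransmission alone would give at-least-once delivery; the receiver's set of seen sequence numbers is what turns it into exactly-once delivery to the application.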

Ethernet: Ethernet is the most widely used protocol in the non-real-time world. The original bus-based Ethernet, controlled by the CSMA/CD (carrier sense multiple access/collision detection) access-control strategy with exponential back-off [Met76], has over the years morphed into a switched Ethernet configuration with a star topology, standardized in IEEE standard 802.3. An Ethernet switch deploys a best-effort flow-control strategy with a buffer in front of the link to the final receiver. If this buffer overflows, further messages to this receiver are discarded. If exactly-once semantics must be implemented in an Ethernet system, a higher-level protocol that uses two or more Ethernet messages must be provided.
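The exponential back-off of the original CSMA/CD Ethernet is, in IEEE 802.3, a truncated binary exponential back-off: after the n-th collision of a frame, the station waits a random number of slot times drawn uniformly from 0 to 2^min(n, 10) - 1, and it discards the frame after 16 unsuccessful attempts. The following minimal sketch models only this choice of delay, not carrier sensing or collision detection; the helper name `backoff_slots` is hypothetical.

```python
import random

BACKOFF_LIMIT = 10   # the exponent stops growing after 10 collisions
ATTEMPT_LIMIT = 16   # after 16 collisions the frame is discarded


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Number of slot times to wait after the given number of collisions."""
    if collisions >= ATTEMPT_LIMIT:
        raise RuntimeError("excessive collisions: frame discarded")
    k = min(collisions, BACKOFF_LIMIT)
    return rng.randrange(2 ** k)      # uniform in 0 .. 2^k - 1


if __name__ == "__main__":
    rng = random.Random(0)
    print([backoff_slots(n, rng) for n in range(1, 16)])
```

The random, growing waiting time is what makes the access delay of bus-based Ethernet unbounded in the worst case, since nothing prevents repeated collisions.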

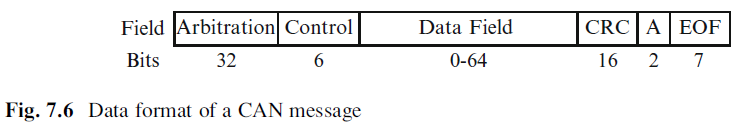

Controller Area Network: The CAN (Controller Area Network) protocol developed by Bosch [CAN90] is a bus-based CSMA/CA (carrier sense multiple access/collision avoidance) protocol that exercises backpressure flow control on the sender. The CAN message consists of six fields, as depicted in Fig. 7.6. The first field is a 32-bit arbitration field that contains the 29-bit message identifier. (The original CAN had only an 11-bit message identifier, supporting at most 2,048 different message identifiers.) The arbitration field is followed by a 6-bit control field and a data field of 0 to 64 bits. The data in the first three fields are protected by a 16-bit CRC field that ensures a Hamming distance of 6. The fields after the CRC field are used for an immediate acknowledgment message.
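As background for the CRC field: in the Bosch CAN specification, the 16-bit CRC field consists of a 15-bit CRC sequence followed by a one-bit delimiter, and the sequence is computed with the generator polynomial x^15 + x^14 + x^10 + x^8 + x^7 + x^4 + x^3 + 1 (0x4599) over the bits of the preceding fields, before bit stuffing. The sketch below follows the shift-register formulation of that specification; the function name and the list-of-bits input format are assumptions made for illustration.

```python
CAN_CRC15_POLY = 0x4599   # x^15 + x^14 + x^10 + x^8 + x^7 + x^4 + x^3 + 1


def can_crc15(bits):
    """CRC-15 over a sequence of 0/1 values, most significant bit first."""
    crc = 0                                   # register starts at zero
    for bit in bits:
        crc_nxt = bit ^ ((crc >> 14) & 1)     # next input XOR current top register bit
        crc = (crc << 1) & 0x7FFF             # shift left, keep 15 bits
        if crc_nxt:
            crc ^= CAN_CRC15_POLY
    return crc


if __name__ == "__main__":
    frame_bits = [1, 0, 1, 1, 0, 0, 1, 0] * 5   # hypothetical field contents
    print(hex(can_crc15(frame_bits)))
```

The receiver recomputes the same value over the received bits; any mismatch (for example, from a single flipped bit) causes the message to be rejected.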

In CAN, access to the CAN bus is controlled by an arbitration logic that assumes the existence of a recessive and a dominant state on the bus, such that the dominant state can overwrite the recessive state. This requires that the propagation delay of the channel be smaller than the length of a bit cell of the CAN message. Assume that a 0 is coded into the dominant state and a 1 into the recessive state. Whenever a node intends to send a message, it puts the first bit of the arbitration field (i.e., the message identifier) on the channel and monitors the resulting bus state. In case of a conflict, the node with a 0 in its first identifier bit wins, and a node with a 1, which observes the dominant state instead of its own recessive bit, must back off. This arbitration continues for all bits of the arbitration field. A node with all 0s always wins; this is the bit pattern of the highest-priority message. In CAN, the message identifier thus determines the message priority.
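The bitwise arbitration just described can be simulated directly. This sketch models the bus as a wired-AND (a dominant 0 from any node overwrites recessive 1s) and assumes that all contending identifiers are distinct and fit in `id_bits` bits; the function name is hypothetical.

```python
def can_arbitrate(identifiers, id_bits=11):
    """Return the surviving node(s) after bitwise arbitration, MSB first.

    0 is dominant, 1 is recessive; identifiers are assumed to be distinct.
    """
    contenders = list(identifiers)
    for pos in range(id_bits - 1, -1, -1):
        sent = {ident: (ident >> pos) & 1 for ident in contenders}
        bus = min(sent.values())      # any dominant 0 overwrites the recessive 1s
        # A node that sent a recessive 1 but reads a dominant 0 backs off.
        contenders = [ident for ident in contenders if sent[ident] == bus]
    return contenders


if __name__ == "__main__":
    print([hex(i) for i in can_arbitrate([0x65A, 0x123, 0x7FF])])   # 29-bit: id_bits=29
```

The survivor is always the numerically smallest identifier, which is why the identifier doubles as the message priority and why the winner's message is transmitted without being destroyed by the collision.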

User Datagram Protocol: The user datagram protocol (UDP) is the stateless datagram protocol of the Internet protocol suite. It is an efficient, unreliable, unidirectional message protocol that requires no set-up of transmission channels and supports multicasting on a local area network using a best-effort flow-control strategy. Many real-time applications use UDP because the trade-off between latency and reliability is not hard-wired in UDP (in contrast to the Transmission Control Protocol, TCP) but can be made at the application level, taking into account the application semantics. UDP is also used for multimedia streaming applications.
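The application-level trade-off can be sketched with Python's standard `socket` module: the receiver waits only a bounded time for a datagram and discards readings that are too old, instead of waiting for retransmissions as TCP would. The datagram layout (a send timestamp followed by a value, packed as two doubles) and the function names are hypothetical. Comparing `time.monotonic()` values is meaningful here only because sender and receiver run on the same host; across nodes, a synchronized global time would be needed.

```python
import socket
import struct
import time


def send_reading(sock, addr, value: float) -> None:
    # Hypothetical layout: send timestamp and value, both as network-order doubles.
    sock.sendto(struct.pack("!dd", time.monotonic(), value), addr)


def receive_fresh(sock, max_age_s: float):
    """Return the received value, or None if nothing arrives in time or it is stale."""
    try:
        data, _ = sock.recvfrom(16)
    except socket.timeout:
        return None                    # loss is tolerated, not repaired by retransmission
    sent_at, value = struct.unpack("!dd", data)
    if time.monotonic() - sent_at > max_age_s:
        return None                    # too old to be useful for this application
    return value


if __name__ == "__main__":
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))          # port 0: let the OS choose a free port
    rx.settimeout(0.5)                 # bounded wait instead of blocking forever
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    send_reading(tx, rx.getsockname(), 21.5)
    print(receive_fresh(rx, max_age_s=0.1))
    tx.close()
    rx.close()
```

Both the timeout and the freshness bound are application decisions; UDP itself neither retransmits nor ages messages.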