Introduction to Operating Systems

Introduction: A good real-time operating system not only provides efficient mechanisms and services to carry out good real-time scheduling and resource management policies, but also keeps its own time and resource consumption predictable and accountable. This section describes how operating system functions can be implemented to accomplish these objectives. It then examines how well several widely used real-time operating systems and general-purpose operating systems perform in this respect.

We now switch over to the terminology commonly used in the operating system literature. This section adds a few important terms to those we used in earlier chapters. We also make several assumptions about the implementation of the applications and the architecture of the operating system. These assumptions keep our discussion concrete and lead to no loss of generality for most of the chapter.

Threads and Tasks: A thread implements a computation job and is the basic unit of work handled by the scheduler. In earlier chapters, we spoke of the admission of a job (or a task) into the system after an acceptance test; this step encompasses the creation of a thread that implements the job.

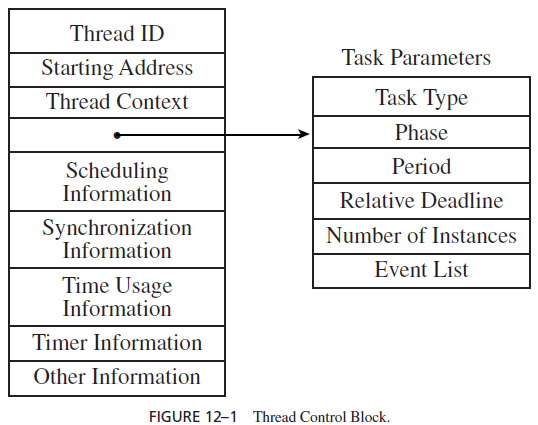

When the kernel creates a thread, it allocates memory space to the thread and brings the code to be executed by the thread into memory. In addition, it instantiates a data structure called the Thread Control Block (TCB) and uses the structure to keep all the information it will need to manage and schedule the thread. Figure 12–1 shows a general structure of thread control blocks. The information kept in the TCB of a thread includes the ID of the thread and the starting address of the thread’s code. The context of a thread refers to the values of registers (e.g., program counter and status register) and other volatile data that define the state and environment of the thread. When a thread is executing, its context changes continuously. When the thread stops executing, the kernel saves its context at that time in the thread’s TCB. We will explain the other types of information later, as the need for them becomes apparent. When we say that the operating system inserts a thread in a queue (e.g., the ready or suspend queue), we mean that it inserts (a pointer to) the TCB of the thread into a linked list of TCBs of other threads in that queue. The kernel destroys a thread by deleting its TCB and deallocating its memory space.
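To make the TCB concrete, here is a minimal sketch in C of a thread control block and of a queue that links TCBs by pointer, as the paragraph above describes. The field names, the three-register context, and the FIFO insertion policy are illustrative assumptions, not the layout shown in Figure 12–1 or used by any particular kernel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Illustrative subset of the context saved when a thread stops executing. */
typedef struct {
    uintptr_t pc;       /* program counter */
    uintptr_t sp;       /* stack pointer */
    uint32_t  status;   /* status register */
} context_t;

/* Hypothetical thread control block. */
typedef struct tcb {
    int          id;            /* thread ID */
    void       (*start)(void);  /* starting address of the thread's code */
    context_t    context;       /* context saved by the kernel */
    int          priority;      /* scheduling information (assumed field) */
    struct tcb  *next;          /* next TCB in whatever queue holds this one */
} tcb_t;

/* A queue (ready, suspend, ...) is a linked list of TCB pointers. */
typedef struct {
    tcb_t *head;
    tcb_t *tail;
} tcb_queue;

/* Insert (a pointer to) a TCB at the tail of a queue. */
static void queue_insert(tcb_queue *q, tcb_t *t) {
    assert(t != NULL);
    t->next = NULL;
    if (q->tail != NULL)
        q->tail->next = t;
    else
        q->head = t;
    q->tail = t;
}

/* Remove and return the TCB at the head of a queue, or NULL if it is empty. */
static tcb_t *queue_remove_head(tcb_queue *q) {
    tcb_t *t = q->head;
    if (t != NULL) {
        q->head = t->next;
        if (q->head == NULL)
            q->tail = NULL;
        t->next = NULL;
    }
    return t;
}
```

Because the queue links the TCBs themselves through their `next` fields, moving a thread between queues costs only a few pointer updates; no thread data is copied.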

Periodic Threads. A periodic (computation) task is a thread that executes periodically. It would clearly be inefficient to create and destroy the thread anew in every period. In an operating system that supports periodic tasks (e.g., Real-Time Mach [ToNR] and EPIQ [DLZS]), the kernel reinitializes such a thread and puts it to sleep when the thread completes. The kernel keeps track of the passage of time and releases (i.e., moves to the ready queue) the thread again at the beginning of the next period. We call such a thread a periodic thread.

The parameters of a periodic thread include its phase (i.e., the interval between its creation and its first release time), period, relative deadline, and the number of instances. A periodic thread with a finite number of instances terminates and may be destroyed by the kernel after it has executed the specified number of times. These parameters are given by the application when it requests the creation of the periodic thread, and they are kept in the TCB of the thread.
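The release times and deadlines a kernel derives from these parameters follow directly from the definitions: for a thread created at time c, the k-th instance (k = 0, 1, ...) is released at c + phase + k * period and must complete by that release time plus the relative deadline. The sketch below assumes integer clock ticks and treats a non-positive instance count as "unbounded"; the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical record of a periodic thread's parameters (times in ticks). */
typedef struct {
    long phase;              /* interval from creation to the first release */
    long period;
    long relative_deadline;
    long instances;          /* number of instances; <= 0 means unbounded (assumption) */
} periodic_params;

/* Release time of instance k (k = 0, 1, ...) of a thread created at `created`. */
static long release_time(const periodic_params *p, long created, long k) {
    assert(p->period > 0 && k >= 0);
    return created + p->phase + k * p->period;
}

/* Absolute deadline of instance k: its release time plus the relative deadline. */
static long absolute_deadline(const periodic_params *p, long created, long k) {
    return release_time(p, created, k) + p->relative_deadline;
}

/* True once a finite periodic thread has run all its instances and may be destroyed. */
static bool is_finished(const periodic_params *p, long completed) {
    return p->instances > 0 && completed >= p->instances;
}
```

For example, a thread created at tick 100 with phase 5, period 20 and relative deadline 15 has its second instance (k = 1) released at tick 125 with deadline 140.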

Most commercial operating systems do not support periodic threads. We can implement a periodic task at the user level as a thread that alternately executes the code of the task and sleeps until the beginning of the next period. (In other words, the thread does its own reinitialization and keeps track of time for its own next release.)
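A user-level periodic task of this kind can be sketched with the POSIX `clock_nanosleep` call and an absolute wake-up time. The function names, the zero phase, and the choice of `CLOCK_MONOTONIC` are assumptions of this sketch. Computing each release time from the previous one, rather than sleeping for a relative interval after the job finishes, keeps the execution time of one instance from shifting every later release.

```c
#define _POSIX_C_SOURCE 200112L
#include <assert.h>
#include <errno.h>
#include <time.h>

#define NSEC_PER_SEC 1000000000L

/* Advance an absolute time by ns nanoseconds, keeping tv_nsec in [0, 1e9). */
static void timespec_add_ns(struct timespec *t, long ns) {
    t->tv_sec  += ns / NSEC_PER_SEC;
    t->tv_nsec += ns % NSEC_PER_SEC;
    if (t->tv_nsec >= NSEC_PER_SEC) {
        t->tv_nsec -= NSEC_PER_SEC;
        t->tv_sec  += 1;
    }
}

/* Hypothetical user-level periodic thread body: run `instances` instances of
   `job`, one per period, with the first release at the time of the call. */
static void run_periodic(void (*job)(void *), void *arg,
                         long period_ns, int instances) {
    assert(job != NULL && period_ns > 0);
    struct timespec next;
    clock_gettime(CLOCK_MONOTONIC, &next);     /* release time of the first instance */
    for (int k = 0; k < instances; k++) {
        int rc;
        do {  /* sleep until the release time; returns at once if it has passed */
            rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL);
        } while (rc == EINTR);                 /* interrupted by a signal: sleep again */
        job(arg);                              /* execute one instance of the task */
        timespec_add_ns(&next, period_ns);     /* beginning of the next period */
    }
}

/* Example job: counts how many instances have run. */
static void count_instance(void *arg) { ++*(int *)arg; }
```

Because the thread tracks its own release times, the kernel sees it only as an ordinary thread that repeatedly sleeps and wakes up.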

Aperiodic, Sporadic, and Server Threads. Analogously, we can implement a sporadic or aperiodic task as a sporadic thread or aperiodic thread that is released in response to the occurrence of the specified types of events. The events that cause the releases of these threads occur sporadically and may be triggered by external interrupts. Upon its completion, a sporadic thread or aperiodic thread is also reinitialized and suspended. We assumed this implementation of aperiodic (and sporadic) tasks in Figures 5–7 and 5–10, which describe a cyclic executive in a multithreaded time-driven system. An aperiodic task is the same as a periodic task, except for the types of events that cause their releases.
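The following user-level sketch models an aperiodic (or sporadic) thread with POSIX threads. The thread sleeps on a condition variable; each call to the hypothetical handler `event_occurred` releases it once; after each job it goes back to waiting, which plays the role of being reinitialized and suspended. A pending-release counter ensures that events arriving while a job executes are not lost. In a kernel the handler would often be an interrupt service routine, which should not block on a mutex, so a real implementation would use a different primitive; all names here are illustrative.

```c
#define _POSIX_C_SOURCE 200112L
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical record for an event-released (aperiodic or sporadic) thread. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  released;     /* signaled when an event releases the thread */
    int             pending;      /* releases not yet serviced */
    bool            stop;         /* request to move to the terminated state */
    void          (*job)(void *); /* code executed on each release */
    void           *arg;
} event_thread;

static void event_thread_init(event_thread *t, void (*job)(void *), void *arg) {
    pthread_mutex_init(&t->lock, NULL);
    pthread_cond_init(&t->released, NULL);
    t->pending = 0;
    t->stop = false;
    t->job = job;
    t->arg = arg;
}

/* Thread body: sleep until released, run one job, then sleep again. */
static void *event_thread_body(void *p) {
    event_thread *t = p;
    pthread_mutex_lock(&t->lock);
    for (;;) {
        while (t->pending == 0 && !t->stop)      /* sleeping: not eligible to run */
            pthread_cond_wait(&t->released, &t->lock);
        if (t->pending == 0)                     /* stop requested, nothing pending */
            break;
        t->pending--;
        pthread_mutex_unlock(&t->lock);
        t->job(t->arg);                          /* execute the released job */
        pthread_mutex_lock(&t->lock);            /* completed: go back to sleep */
    }
    pthread_mutex_unlock(&t->lock);
    return NULL;
}

/* Called by an event handler to release the thread once. */
static void event_occurred(event_thread *t) {
    pthread_mutex_lock(&t->lock);
    t->pending++;
    pthread_cond_signal(&t->released);
    pthread_mutex_unlock(&t->lock);
}

/* Ask the thread to terminate after servicing all pending releases. */
static void event_thread_stop(event_thread *t) {
    pthread_mutex_lock(&t->lock);
    t->stop = true;
    pthread_cond_signal(&t->released);
    pthread_mutex_unlock(&t->lock);
}

/* Example job: counts how many times the thread has been released. */
static void count_job(void *arg) { ++*(int *)arg; }
```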

We call a thread that implements a bandwidth-preserving server or a slack stealer a server thread. In the chapter on scheduling aperiodic and sporadic jobs, we talked about a “server queue.” Such a queue is simply a list of pointers that give the starting addresses of functions to be executed by the server thread. Each aperiodic (or sporadic) job is the execution of one of these functions. Upon the occurrence of an event that triggers the release of an aperiodic job, the event handler (frequently an interrupt service routine) inserts into this list a pointer to the corresponding function. Thus, the aperiodic job is “released” and queued. When the server is scheduled, it executes these functions in turn. In our discussion of bandwidth-preserving servers, we assume that this is how aperiodic and sporadic jobs are implemented.
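The server queue described above can be sketched as a fixed-size ring buffer of function pointers: the event handler "releases" an aperiodic job by appending a pointer, and the server thread, when scheduled, calls the queued functions in FIFO order. The capacity, the FIFO order, and all names are assumptions. The sketch also omits the synchronization between handler and server and the budget accounting that a bandwidth-preserving server would need.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SERVER_QUEUE_CAP 16            /* hypothetical fixed capacity */

typedef void (*aperiodic_fn)(void);    /* starting address of an aperiodic job */

/* The "server queue": a FIFO of pointers to functions the server executes. */
typedef struct {
    aperiodic_fn jobs[SERVER_QUEUE_CAP];
    size_t head;                       /* index of the oldest queued job */
    size_t count;                      /* number of queued jobs */
} server_queue;

/* Called by the event handler: release an aperiodic job by queueing its function.
   Returns false if the queue is full and the job cannot be queued. */
static bool release_aperiodic(server_queue *q, aperiodic_fn f) {
    assert(f != NULL);
    if (q->count == SERVER_QUEUE_CAP)
        return false;
    q->jobs[(q->head + q->count) % SERVER_QUEUE_CAP] = f;
    q->count++;
    return true;
}

/* Server thread body when scheduled: execute the queued functions in turn.
   Returns the number of jobs executed. */
static size_t server_run(server_queue *q) {
    size_t done = 0;
    while (q->count > 0) {
        aperiodic_fn f = q->jobs[q->head];
        q->head = (q->head + 1) % SERVER_QUEUE_CAP;
        q->count--;
        f();                           /* one aperiodic job */
        done++;
    }
    return done;
}

/* Example aperiodic jobs that record the order in which they run. */
static char trace[8];
static size_t trace_len;
static void job_a(void) { if (trace_len < sizeof trace) trace[trace_len++] = 'a'; }
static void job_b(void) { if (trace_len < sizeof trace) trace[trace_len++] = 'b'; }
```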

Major States. Our subsequent discussion focuses primarily on priority-driven systems. We distinguish five major states of a thread.

• Sleeping: A periodic, aperiodic, or server thread is put in the sleeping state immediately after it is created and initialized. It is released and leaves the state upon the occurrence of an event of the specified types. Upon the completion of a thread that is to execute again, it is reinitialized and put in the sleeping state. A thread in this state is not eligible for execution.

• Ready: A thread enters the ready state after it is released or when it is preempted. A thread in this state is in the ready queue and eligible for execution.

• Executing: A thread is in the executing state when it executes.

• Suspended (or Blocked): A thread that has been released and is yet to complete enters the suspended (or blocked) state when its execution cannot proceed for some reason. The kernel puts a suspended thread in the suspended queue.

• Terminated: A thread that will not execute again enters the terminated state when it completes. A terminated thread may be destroyed.
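The five states and the transitions among them can be summarized as a small state machine. The transitions for release, preemption, blocking, and completion come from the descriptions above; the dispatch (ready to executing) and unblock (suspended to ready) events are implied rather than stated, and all event names are hypothetical.

```c
#include <assert.h>

typedef enum { SLEEPING, READY, EXECUTING, SUSPENDED, TERMINATED, INVALID } thread_state;

typedef enum {
    EV_RELEASE,        /* triggering event occurs (or next period begins) */
    EV_DISPATCH,       /* scheduler selects the thread to run */
    EV_PREEMPT,        /* a higher-priority thread becomes ready */
    EV_BLOCK,          /* execution cannot proceed (resource, I/O, sync, ...) */
    EV_UNBLOCK,        /* the reason for suspension goes away */
    EV_COMPLETE,       /* completes and will execute again */
    EV_COMPLETE_LAST   /* completes and will not execute again */
} thread_event;

/* Return the state entered on event e from state s, or INVALID if none. */
static thread_state transition(thread_state s, thread_event e) {
    switch (s) {
    case SLEEPING:
        return e == EV_RELEASE ? READY : INVALID;
    case READY:
        return e == EV_DISPATCH ? EXECUTING : INVALID;
    case EXECUTING:
        switch (e) {
        case EV_PREEMPT:       return READY;
        case EV_BLOCK:         return SUSPENDED;
        case EV_COMPLETE:      return SLEEPING;    /* reinitialized, sleeps */
        case EV_COMPLETE_LAST: return TERMINATED;  /* may now be destroyed */
        default:               return INVALID;
        }
    case SUSPENDED:
        return e == EV_UNBLOCK ? READY : INVALID;
    default:
        return INVALID;   /* a terminated thread has no outgoing transitions */
    }
}
```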

Recall from our earlier discussion that a job (and hence a thread) can be suspended or blocked for many reasons. For example, it may be blocked due to resource-access control; it may be waiting to synchronize its execution with some other thread(s); it may be held waiting for some reason (e.g., I/O completion and jitter control); and so on. A bandwidth-preserving server thread enters the suspended state when it has no budget or no aperiodic job to execute. The operating system typically keeps separate queues for threads suspended or blocked for different reasons (e.g., a queue for threads waiting for each resource). For the sake of simplicity, we call them collectively the suspended queue. Similarly, the kernel usually keeps a number of ready queues. For example, to support fixed-priority scheduling, there may be a queue for ready threads of each priority level. We often call all these queues collectively the ready queue.
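For fixed-priority scheduling, the collection of per-priority ready queues might look like the sketch below: one FIFO list of TCBs per priority level, with the scheduler taking the first thread from the highest-priority non-empty list. The number of levels, the convention that 0 is the highest priority, and the names are assumptions. The linear scan is chosen for clarity; a kernel might instead keep a bitmap of non-empty levels to find the highest one faster.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_PRIORITIES 32   /* hypothetical number of levels; 0 is the highest */

typedef struct tcb {
    int         id;
    int         priority;
    struct tcb *next;       /* next TCB at the same priority level */
} tcb_t;

/* "The ready queue": one FIFO list per priority level. */
typedef struct {
    tcb_t *head[NUM_PRIORITIES];
    tcb_t *tail[NUM_PRIORITIES];
} ready_queue;

/* Make a thread ready: append it to the queue for its priority level. */
static void make_ready(ready_queue *rq, tcb_t *t) {
    assert(t->priority >= 0 && t->priority < NUM_PRIORITIES);
    int p = t->priority;
    t->next = NULL;
    if (rq->tail[p] != NULL)
        rq->tail[p]->next = t;
    else
        rq->head[p] = t;
    rq->tail[p] = t;
}

/* Remove and return the highest-priority ready thread, or NULL if none. */
static tcb_t *pick_next(ready_queue *rq) {
    for (int p = 0; p < NUM_PRIORITIES; p++) {
        tcb_t *t = rq->head[p];
        if (t != NULL) {
            rq->head[p] = t->next;
            if (rq->head[p] == NULL)
                rq->tail[p] = NULL;
            t->next = NULL;
            return t;
        }
    }
    return NULL;
}
```

Threads of equal priority leave their level's queue in the order they became ready, which gives FIFO service within each priority level.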