The Kernel

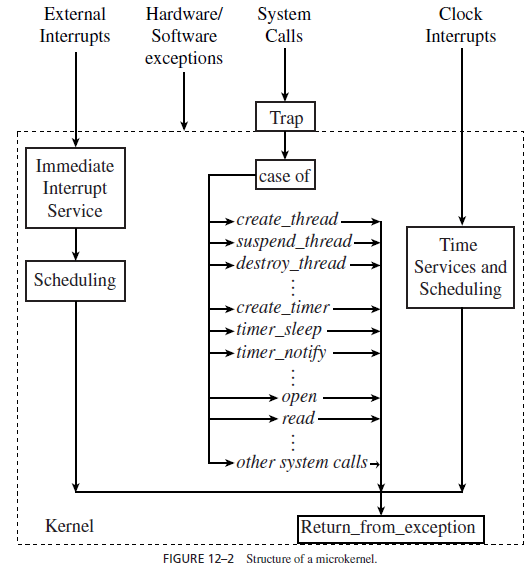

Again, with a few exceptions, a real-time operating system consists of a microkernel that provides the basic operating system functions described below. Figure 12–2 shows the general structure of a microkernel. The kernel takes control from the executing thread and executes itself for three reasons: to respond to a system call, to do scheduling and service timers, and to handle external interrupts. The kernel also handles recovery from hardware and software exceptions, but we ignore those activities here.

System Calls. The kernel provides many functions which, when called, do some work on behalf of the calling thread. An application can access kernel data and code via these functions. They are called Application Program Interface (API) functions. Figure 12–2 lists several examples.

A system call is a call to one of the API functions. In a system that provides memory protection, user and kernel threads execute in separate memory spaces. Upon receiving a system call, the kernel saves the context of the calling thread and switches from the user mode to the kernel mode. It then picks up the function name and arguments of the call from the thread’s stack and executes the function on behalf of the thread. When the system call completes, the kernel executes a return from exception. As a result, the system returns to the user mode. The calling thread resumes if it still has the highest priority. If the system call causes some other thread to have the highest priority, then that thread executes. We have just described what happens when a thread makes a synchronous system call. The calling thread is blocked until the kernel completes the called function. When the call is asynchronous (e.g., in the case of an asynchronous I/O request), the calling thread continues to execute after making the call. The kernel provides a separate thread to execute the called function.
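The difference between the two kinds of calls can be illustrated with a minimal user-level sketch. It is not kernel code: the function `kernel_read`, the device name `disk0`, and the single worker thread standing in for the kernel-provided thread are all assumptions made for illustration.

```python
from concurrent.futures import Future, ThreadPoolExecutor


def kernel_read(device: str) -> str:
    """Hypothetical API function: the work done on behalf of the caller."""
    return f"data from {device}"


def sync_call(device: str) -> str:
    # Synchronous call: the caller does not continue until the function
    # has completed and its result is available.
    return kernel_read(device)


# Stand-in for the separate thread that executes asynchronous calls.
kernel_worker = ThreadPoolExecutor(max_workers=1)


def async_call(device: str) -> Future:
    # Asynchronous call: hand the function to the worker thread and return
    # at once; the caller collects the result later from the Future.
    return kernel_worker.submit(kernel_read, device)


result = sync_call("disk0")        # caller is blocked until the call completes
pending = async_call("disk0")      # caller gets control back immediately
other_work = sum(range(1000))      # caller keeps executing in the meantime
late_result = pending.result()     # caller picks up the completed result
kernel_worker.shutdown()
```

The sketch leaves out the mode switch and the priority check on return; it only shows who waits for whom.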

Many embedded operating systems do not provide memory protection; user and kernel threads execute in the same memory space. The reasons for this choice are the relative trustworthiness of embedded applications and the need to keep overhead small. (The extra memory space needed to provide full memory protection is on the order of a few kilobytes per process. This overhead is more serious for small embedded applications than the higher context-switch overhead that memory protection also incurs.) In such a system, a system call is just like a procedure or function call within the application.

Figure 12–2 shows examples of thread management functions: create thread, suspend thread, resume thread, and destroy thread.⁴ The timer functions listed below them exemplify the time services a real-time operating system provides. From the user’s point of view, a (software) timer is an object used to keep track of time. In addition to systemwide timers, most operating systems allow threads (or processes) to have their own timers. A per-thread (or per-process) timer is created by the kernel on behalf of a thread (or process) when the thread calls the create timer function. In a system that contains more than one clock, the calling thread specifies the clock to which the timer is to be bound; by this, we mean that the timer will keep track of time according to that clock. A clock is a hardware device that contains a counter. At any time, the content of the counter gives a representation of the current time.
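As a small illustration of a system with more than one clock, the sketch below reads two of the clocks defined by POSIX, `CLOCK_REALTIME` and `CLOCK_MONOTONIC`, through Python's `time` module (available on Unix-like systems only). The helper `read_clock` is a name introduced here; the calls give the software view of the clocks and say nothing about the underlying hardware counters.

```python
import time
from typing import Tuple


def read_clock(clock_id: int) -> Tuple[float, float]:
    """Return (current reading, resolution) of a clock, both in seconds."""
    return time.clock_gettime(clock_id), time.clock_getres(clock_id)


for name in ("CLOCK_REALTIME", "CLOCK_MONOTONIC"):
    reading, resolution = read_clock(getattr(time, name))
    print(f"{name}: reading={reading:.6f} s, resolution={resolution:.9f} s")
```

A timer bound to one of these clocks would measure its expiration time against that clock's reading.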

A set-timer function call specifies the ID of the timer to be set and an expiration time. By calling this function, a thread asks the kernel to carry out an action at the timer expiration time. The action may be the execution of a specified function, the waking up of a suspended thread, the placement of a message in a message queue, and so on. We say that a timer event occurs at the timer’s expiration time; when a timer event occurs, the kernel carries out the specified action. As a consequence, the calling thread carries out its own action or synchronizes with other threads at the specified time. In a system that supports periodic tasks, the kernel uses such a function to take care of the releases of periodic thread instances. We will return to clocks and timers in more detail in Section 12.2.1.
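The create-timer and set-timer calls and the three kinds of actions just listed can be sketched with a toy model. Everything here is hypothetical: the class `MiniKernel`, its method names, the thread name `worker`, and the time values are invented for illustration and do not correspond to any particular operating system's API.

```python
from collections import deque
from itertools import count


class MiniKernel:
    """Toy model of one-shot software timers; every name is hypothetical."""

    def __init__(self):
        self._next_id = count(1)
        self.timers = set()           # IDs of all timers created so far
        self.armed = {}               # timer ID -> (expiration time, action)
        self.suspended = {"worker"}   # threads waiting to be woken up
        self.mailbox = deque()        # a message queue

    def create_timer(self):
        timer_id = next(self._next_id)
        self.timers.add(timer_id)
        return timer_id

    def set_timer(self, timer_id, expiration, action):
        if timer_id not in self.timers:
            raise KeyError(f"no such timer: {timer_id}")
        self.armed[timer_id] = (expiration, action)

    def handle_timer_events(self, now):
        """Carry out, in time order, the action of each timer expired by now."""
        due = sorted((exp, tid) for tid, (exp, _) in self.armed.items()
                     if exp <= now)
        for _, tid in due:
            _, action = self.armed.pop(tid)   # one-shot: disarm, then act
            action()
        return [tid for _, tid in due]


kernel = MiniKernel()
log = []
t1, t2, t3 = (kernel.create_timer() for _ in range(3))
kernel.set_timer(t1, 30, lambda: log.append("callback ran"))         # run a function
kernel.set_timer(t2, 20, lambda: kernel.suspended.discard("worker"))  # wake a thread
kernel.set_timer(t3, 50, lambda: kernel.mailbox.append("timeout"))    # queue a message

fired_at_35 = kernel.handle_timer_events(now=35)   # t2 (due at 20), then t1 (due at 30)
fired_at_50 = kernel.handle_timer_events(now=50)   # t3
```

In this sketch the timers are one-shot; a kernel that releases periodic thread instances would re-arm the timer at each expiration.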

Time Services and Scheduling. The scheduler is a central part of the kernel. In most operating systems, the scheduler executes periodically, as well as whenever the state of any thread changes. To trigger the scheduler into action, the system clock device raises interrupts periodically. A clock interrupt is an interrupt from this device. In Section 6.8.5, which describes how to determine the schedulability of applications that run on an operating system whose scheduler executes only periodically, we called the period of clock interrupts the tick size. The tick size used by most operating systems is 10 milliseconds.⁵ At each clock interrupt, the kernel performs the following chores.

1. Processes timer events: A clock device has a timer queue. The pending expiration times of all the timers that are bound to the clock are stored in time order in this queue. By checking this queue, the kernel can determine whether any timer events have occurred since it last checked the queue. This is what the kernel does first when servicing a clock interrupt. As we said earlier, when the kernel finds that a timer event has occurred, it must carry out the specified action. The kernel processes in turn all the timer events that have occurred and queues the specified actions. It carries out the actions before returning control to the user.

2. Updates execution budget: Most real-time operating systems (including all Real-Time POSIX-compliant systems) provide the user with the choice of round-robin or FIFO scheduling of threads of equal priority. (These policies are called SCHED_RR and SCHED_FIFO, respectively.) To schedule equal-priority threads in a round-robin manner, the scheduler gives such a thread a time slice when it schedules the thread for execution. We can think of the time slice as the execution budget of the executing thread. At each clock interrupt, the scheduler decrements the budget of the thread by the tick size. If the thread is not complete when the budget (i.e., its remaining time slice) becomes 0, the kernel decides to preempt the thread. The same mechanism can be used for the FIFO policy. The scheduler simply gives a thread that chooses the FIFO policy an infinite time slice or does not decrement the budget of such a thread, thus making the thread nonpreemptable by equal-priority threads.

3. Updates the ready queue and returns control: As a result of the above actions, some threads may become ready (e.g., released upon timer expiration) and the thread that was executing at the time of the clock interrupt may need to be preempted. The scheduler updates the ready queue accordingly and then gives control to the thread at the head of the highest priority queue.
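The three chores can be put together in a small simulation of a clock interrupt handler. This is a sketch under stated assumptions, not a description of any real kernel: the classes `Thread` and `Kernel`, the 20-millisecond time slice, the convention that a larger number means a higher priority, and the restriction of timer actions to thread releases are all choices made for illustration.

```python
import heapq
from collections import deque

TICK = 10  # assumed tick size in milliseconds


class Thread:
    def __init__(self, name, priority, policy="RR", time_slice=20):
        self.name, self.priority, self.policy = name, priority, policy
        self.time_slice = time_slice   # ignored for FIFO threads
        self.budget = time_slice


class Kernel:
    def __init__(self):
        self.now = 0
        self.timer_queue = []   # heap of (expiration, sequence no., thread)
        self.ready = {}         # priority -> deque of ready threads
        self.running = None
        self._seq = 0           # tie-breaker so threads are never compared

    def set_release_timer(self, expiration, thread):
        heapq.heappush(self.timer_queue, (expiration, self._seq, thread))
        self._seq += 1

    def make_ready(self, thread):
        self.ready.setdefault(thread.priority, deque()).append(thread)

    def dispatch(self):
        # Run the thread at the head of the highest-priority nonempty queue.
        nonempty = [p for p, q in self.ready.items() if q]
        self.running = self.ready[max(nonempty)].popleft() if nonempty else None

    def clock_interrupt(self):
        self.now += TICK
        # 1. Process timer events: release every thread whose timer expired.
        while self.timer_queue and self.timer_queue[0][0] <= self.now:
            _, _, thread = heapq.heappop(self.timer_queue)
            thread.budget = thread.time_slice
            self.make_ready(thread)
        # 2. Update the execution budget of a round-robin thread only.
        cur, slice_used_up = self.running, False
        if cur is not None and cur.policy == "RR":
            cur.budget -= TICK
            slice_used_up = cur.budget <= 0
        # 3. Update the ready queue and return control.
        if cur is not None:
            queue = self.ready.setdefault(cur.priority, deque())
            if slice_used_up:
                cur.budget = cur.time_slice
                queue.append(cur)       # go behind equal-priority threads
            else:
                queue.appendleft(cur)   # keep the head of its own queue
        self.dispatch()
        return self.running


kernel = Kernel()
a, b = Thread("A", priority=1), Thread("B", priority=1)
c = Thread("C", priority=2, policy="FIFO")
kernel.make_ready(a)
kernel.make_ready(b)
kernel.dispatch()                    # A runs first
kernel.set_release_timer(30, c)      # C is released at time 30
trace = [kernel.clock_interrupt().name for _ in range(4)]
```

In this run, `trace` lists the thread chosen at times 10, 20, 30, and 40: A keeps the processor until its slice runs out, B takes over, and the higher-priority FIFO thread C preempts B as soon as its release timer expires and is never time-sliced afterward.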

In addition, the kernel may update monitoring information, such as interval timers that keep track of the total processor time consumed by the thread and the time spent by the kernel on behalf of the thread.

We can see from what it does at each clock interrupt that the periodic execution of the scheduler is necessary. However, if the scheduler executes only at clock interrupts, the responsiveness and schedulability of the system depend critically on the tick size. The typical 10-millisecond tick size is sufficiently short for round-robin scheduling of time-shared applications but may severely degrade the schedulability of applications with stringent timing requirements. A smaller tick size can improve the system in this respect but means more frequent service of clock interrupts and a higher scheduling overhead. For this reason, most operating systems do not rely on tick scheduling (sometimes called time-based scheduling) alone. Rather, it is used in combination with event-driven scheduling. The kernel invokes the scheduler to update the ready queue whenever it wakes up or releases a thread, finds a thread unblocked, creates a new thread, and so on. Thus a thread is placed in the proper place in the ready queue as soon as it becomes ready.
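The trade-off in choosing the tick size can be made concrete with a little arithmetic. The sketch below assumes a scheduler that executes only at clock interrupts and an illustrative cost of 20 microseconds per clock interrupt; that cost is an assumed number, not a measurement, and the function name `tick_tradeoff` is introduced here.

```python
def tick_tradeoff(tick_us: float, interrupt_cost_us: float):
    """Return (worst-case wait of a ready thread, processor share of ticks)."""
    # A thread that becomes ready just after a clock interrupt is not
    # noticed until the next one, so it may wait up to one full tick.
    worst_wait_us = tick_us
    # Each tick costs interrupt_cost_us of processor time.
    overhead = interrupt_cost_us / tick_us
    return worst_wait_us, overhead


ASSUMED_COST_US = 20.0   # hypothetical cost of servicing one clock interrupt

for tick_us in (10_000, 1_000, 100):
    wait, overhead = tick_tradeoff(tick_us, ASSUMED_COST_US)
    print(f"tick {tick_us:>6} us: wait up to {wait} us, "
          f"clock interrupts take {overhead:.1%} of the processor")
```

Under these assumed numbers, shrinking the tick from 10 milliseconds to 100 microseconds cuts the worst-case wait a hundredfold but raises the clock-interrupt overhead from 0.2 percent to 20 percent of the processor, which is why event-driven invocation of the scheduler is the more economical way to obtain responsiveness.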