The Least Squares Formula

Introduction: Regression is a family of curve-fitting methods for predicting average response performance for new combinations of factors and understanding which factor changes cause changes in average outputs. Regression methods are perhaps the most widely used statistics or operations research techniques.

The Least Squares Formula:

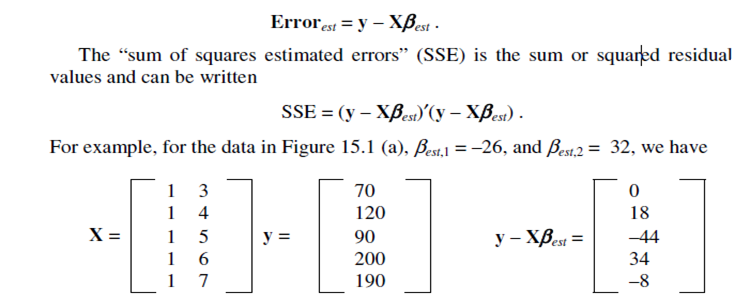

The residuals for all observations can be written compactly in vector form. Here “y” is the column of responses, “X” is the design matrix for the fitted model based on the data, and “Errorest” is the vector of residuals. Then, in vector form, we have

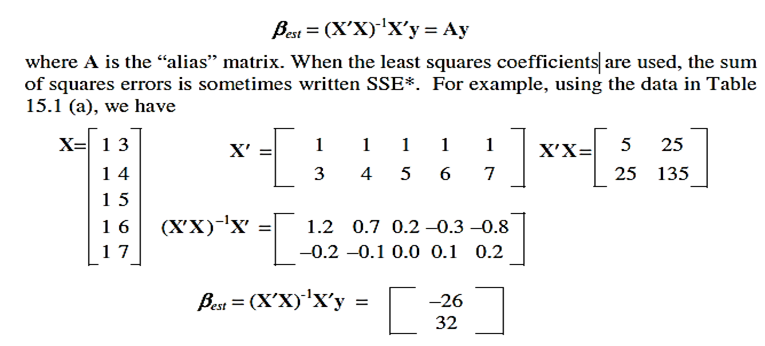
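The vector form can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: it takes the residual vector to be the usual Errorest = y – X βest, and the data, the coefficient guess `beta_guess`, and the helper name `residuals_and_sse` are all made up for the example rather than taken from the text.

```python
import numpy as np

def residuals_and_sse(X, y, beta_est):
    """Return the residual vector y - X @ beta_est and its sum of squares (SSE)."""
    errors_est = y - X @ beta_est          # one residual per observation
    sse = float(errors_est @ errors_est)   # SSE = Errorest' Errorest
    return errors_est, sse

# Hypothetical data: one factor x and a first-order model y ~ beta0 + beta1 * x.
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([3.0, 5.0, 7.0, 10.0])

# Design matrix: a column of ones for the constant term, then the factor column.
X = np.column_stack([np.ones_like(x), x])

# An arbitrary (not optimal) guess at the coefficients.
beta_guess = np.array([1.0, 2.0])

errors_est, sse = residuals_and_sse(X, y, beta_guess)
print(errors_est, sse)
```

Each row of X corresponds to one observation, so a single matrix product yields every residual at once, and the SSE is the inner product of the residual vector with itself.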

This approach can derive settings for more complicated cases involving multiple factors and/or model forms that include second- and third-order terms. Minimizing the SSE is much like minimizing c + bβ + aβ² by changing β. Advanced readers will note that the condition that a is positive for a unique minimum is analogous to the condition that X′X is positive definite. If a is positive, the solution to the easier problem is β = –½ × b ÷ a. The solution to the least squares curve fitting problem is

βest = (X′X)⁻¹X′y

which gives the same prediction model as was estimated by eye and SSE* = 3480.
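As a rough numerical check of the least squares solution, the sketch below solves the normal equations (X′X) βest = X′y for a small made-up data set. The data and the function name `least_squares_fit` are hypothetical and do not reproduce the example behind SSE* = 3480. The code assumes X′X is positive definite, and it solves the linear system rather than forming the inverse explicitly, which is a common numerical choice and not something the text prescribes.

```python
import numpy as np

def least_squares_fit(X, y):
    """Solve the normal equations (X'X) beta = X'y for beta.

    Assumes X'X is positive definite (X has full column rank),
    so the minimizer of the SSE is unique.
    """
    XtX = X.T @ X
    Xty = X.T @ y
    return np.linalg.solve(XtX, Xty)

# Hypothetical data: one factor x and a first-order model y ~ beta0 + beta1 * x.
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([3.0, 5.0, 7.0, 10.0])
X = np.column_stack([np.ones_like(x), x])

beta_est = least_squares_fit(X, y)

# SSE at the least squares coefficients.
residuals = y - X @ beta_est
sse_min = float(residuals @ residuals)

# Cross-check against NumPy's built-in least squares routine.
beta_check, *_ = np.linalg.lstsq(X, y, rcond=None)

print(beta_est, sse_min)
```

For this made-up data the fit is approximately 0.5 + 2.3x with an SSE of about 0.30, and any other choice of coefficients gives a larger SSE.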