Heteroassociation And Analogies To Neural Data Storage

Description:-Heteroassociation is the variant with a dynamic energy surface: here, the shape of the energy surface depends on the current state, and we obtain a heteroassociator instead of an autoassociator. For a heteroassociator,

a(p + ε) = p

no longer holds; instead,

h(p + ε) = q,

which means that a pattern is mapped onto another one. Here ε denotes a small perturbation of the input pattern and h is the heteroassociative mapping. Such heteroassociations are achieved by means of an asymmetric weight matrix V. Heteroassociations connected in series of the form

h(p + ε) = q

h(q + ε) = r

h(r + ε) = s

...

h(z + ε) = p

can provoke a fast cycle of states

p → q → r → s → ... → z → p,

whereby a single pattern is never completely accepted: before a pattern is entirely completed, the heteroassociation already tries to generate its successor. Additionally, the network would never stop, since after reaching the last state z it would proceed to the first state p again.

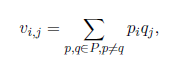

Learning rule for the heteroassociative matrix:-For two training samples, p being the predecessor and q the successor of a heteroassociative transition, the weights of the heteroassociative matrix V result from the learning rule, with several heteroassociations being introduced into the network by simple addition.

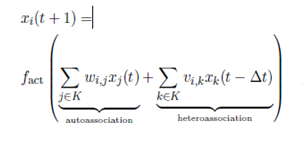
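The additive construction of V can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not necessarily the exact rule intended above: it assumes bipolar (+1/-1) patterns, a Hebbian outer product per transition, the index convention V[i, j] = q_i * p_j, and a sign threshold as the activation. The names `PATTERNS`, `hetero_matrix` and `hetero_step` are hypothetical.

```python
import numpy as np

# Three mutually orthogonal bipolar patterns (an assumption that keeps
# the crosstalk between transitions at zero).
PATTERNS = np.array([
    [1, -1, 1, -1, 1, -1, 1, -1],   # p
    [1, 1, -1, -1, 1, 1, -1, -1],   # q
    [1, 1, 1, 1, -1, -1, -1, -1],   # r
])

def hetero_matrix(patterns):
    """Add one outer product per transition patterns[k] -> patterns[k+1].

    The last pattern is linked back to the first one, which closes the cycle.
    """
    count, n = patterns.shape
    V = np.zeros((n, n), dtype=int)
    for k in range(count):
        pred = patterns[k]
        succ = patterns[(k + 1) % count]
        V += np.outer(succ, pred)   # assumed convention: V[i, j] = q_i * p_j
    return V

def hetero_step(V, x):
    """One synchronous update: threshold the weighted input at zero."""
    return np.where(V @ x >= 0, 1, -1)

V = hetero_matrix(PATTERNS)
p, q, r = PATTERNS

# A perturbed version of p (one flipped component) is still mapped onto q.
noisy_p = p.copy()
noisy_p[0] = -noisy_p[0]
mapped = hetero_step(V, noisy_p)

# Applying the mapping three times walks through the cycle p -> q -> r -> p.
state = p
for _ in range(3):
    state = hetero_step(V, state)
```

With these patterns, `mapped` equals `q` and `state` ends up at `p` again. V is asymmetric because each outer product pairs two different patterns.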

Stabilizing the heteroassociations:-The problem is that the patterns are not completely generated: the next pattern already begins before the generation of the previous one is finished. This problem can be avoided by influencing the network not only by means of the heteroassociative matrix V but also by the already known autoassociative matrix W. Additionally, the neuron adaptation rule is changed so that competing terms are generated: one term autoassociating an existing pattern and one term trying to convert the very same pattern into its successor. The resulting rule causes the network to stabilize a pattern, remain there for a while, go on to the next pattern, and so on.

In the adapted rule, the value Δt delays the influence of the matrix V, since V only refers to the network state Δt steps in the past. The result is a sequence of state changes in which each individual state is stable for a short while. If Δt is set to, for example, twenty steps, then the asymmetric weight matrix registers any change in the network only twenty steps later, so that it initially works together with the autoassociative matrix (since it still perceives the predecessor of the current pattern) and only afterwards works against it.
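The delayed interplay of W and V can be simulated with a short Python sketch. It assumes an update of the form x(t+1) = sign(W x(t) + lam * V x(t - Δt)) with bipolar patterns, Hebbian outer products for both matrices, and synchronous updates. The factor `lam` is a hypothetical parameter introduced here so that the heteroassociative term can overrule the autoassociative one once it becomes active; the function names are likewise illustrative.

```python
from collections import deque

import numpy as np

# Three mutually orthogonal bipolar patterns (assumed for clean arithmetic).
PATTERNS = np.array([
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, -1, -1, 1, 1, -1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
])

def build_matrices(patterns):
    """Return the autoassociative W and the cyclic heteroassociative V."""
    count, n = patterns.shape
    W = np.zeros((n, n), dtype=int)
    V = np.zeros((n, n), dtype=int)
    for k in range(count):
        pred = patterns[k]
        succ = patterns[(k + 1) % count]
        W += np.outer(pred, pred)   # stabilizes each stored pattern
        V += np.outer(succ, pred)   # pushes each pattern to its successor
    return W, V

def run(patterns, delay, steps, lam=2):
    """Simulate x(t+1) = sign(W x(t) + lam * V x(t - delay)).

    Returns the index of the pattern the network is in at every time step.
    """
    W, V = build_matrices(patterns)
    # history[0] is x(t - delay), history[-1] is x(t); start in pattern 0.
    history = deque([patterns[0]] * (delay + 1), maxlen=delay + 1)
    trace = [0]
    for _ in range(steps):
        x_now, x_delayed = history[-1], history[0]
        field = W @ x_now + lam * (V @ x_delayed)
        x_next = np.where(field >= 0, 1, -1)
        history.append(x_next)
        trace.append(int(np.argmax(patterns @ x_next)))
    return trace

trace = run(PATTERNS, delay=3, steps=12)
print(trace)  # [0, 1, 1, 1, 1, 2, 2, 2, 2, 0, 0, 0, 0]
```

Because the history is initialized entirely with the first pattern, the first transition happens immediately; after that, each pattern is held for Δt + 1 = 4 updates before V, which still sees the predecessor during that time, finally perceives the current pattern and drives the network on. The diagonal of W is not zeroed in this sketch, which keeps the arithmetic simple.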

Biological motivation of heteroassociation:-From a biological point of view, the transition of stable states into other stable states is highly motivated: at least in the early nineties it was assumed that the Hopfield model approximates the state dynamics in the brain, which realizes much by means of state chains. Reciting the alphabet, for instance, is usually easier than answering which letter follows the letter P. Another example is the phenomenon that one cannot remember a situation, but the place at which one last memorized it is perfectly known. If one returns to this place, the forgotten situation often comes back to mind.