Image Processing

Three main tasks in image processing to which connectionist methods can be successfully applied are:

(1) Image compression and restoration,

(2) Feature extraction, and

(3) Image classification.

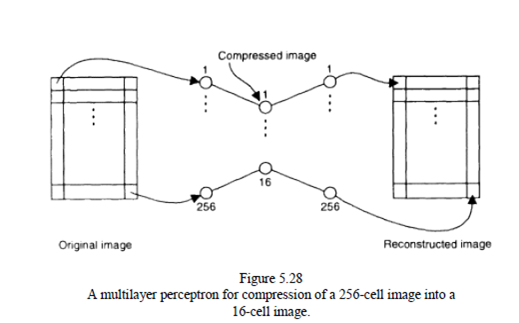

Bart Kosko (1992) shows that competitive learning techniques lead to similar, even slightly better, image compression when compared with mathematical transformations. A satisfactory restoration was achieved when the 256 x 256 black-and-white images were compressed and represented by 0.5 bit/pixel. A modified SOM can also be used for image compression. Other connectionist methods for image compression use an MLP trained with the backpropagation algorithm. They exploit the ability of the hidden layer to capture a unique representation of the input vectors. An MLP with n inputs and n outputs, where n is the dimension of the input vectors, is trained with the same patterns as both inputs and desired outputs. Because the hidden layer has fewer than n neurons, it is the hidden layer that does the compression; in this case it performs a principal component analysis.
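The training scheme described above can be sketched in a few lines. This is only a minimal illustration under stated assumptions, not the method used in the cited work: the units are linear (a real MLP would normally use sigmoid hidden units), the network is a tiny 4-2-4 one, and the names `train_autoencoder`, `encode`, `decode`, `mse`, and the toy `PATTERNS` are hypothetical. The four toy patterns lie in a two-dimensional subspace, so two hidden units are enough to reproduce them.

```python
import random

def encode(w_enc, x):
    """Hidden activations: the compressed code that would be transmitted."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in w_enc]

def decode(w_dec, h):
    """Restore an n-dimensional pattern from the hidden activations."""
    return [sum(w * hj for w, hj in zip(row, h)) for row in w_dec]

def mse(patterns, w_enc, w_dec):
    """Mean squared reconstruction error over all patterns and components."""
    total = 0.0
    for x in patterns:
        y = decode(w_dec, encode(w_enc, x))
        total += sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total / (len(patterns) * len(patterns[0]))

def train_autoencoder(patterns, n_hidden, epochs=3000, lr=0.02, seed=0):
    """Train an n -> n_hidden -> n linear network to reproduce its own input."""
    rng = random.Random(seed)
    n = len(patterns[0])
    w_enc = [[rng.uniform(-0.1, 0.1) for _ in range(n)] for _ in range(n_hidden)]
    w_dec = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n)]
    for _ in range(epochs):
        for x in patterns:
            h = encode(w_enc, x)
            y = decode(w_dec, h)
            err = [yi - xi for yi, xi in zip(y, x)]  # the target is the input itself
            # Backpropagate the output error to the hidden layer
            # (computed before the decoder weights are changed).
            delta_h = [sum(err[i] * w_dec[i][j] for i in range(n))
                       for j in range(n_hidden)]
            for i in range(n):
                for j in range(n_hidden):
                    w_dec[i][j] -= lr * err[i] * h[j]
            for j in range(n_hidden):
                for k in range(n):
                    w_enc[j][k] -= lr * delta_h[j] * x[k]
    return w_enc, w_dec

# Hypothetical toy data: every pattern is a mix of [1,1,0,0] and [0,0,1,1].
PATTERNS = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [1.0, 1.0, 1.0, 1.0],
    [0.5, 0.5, 1.0, 1.0],
]

w_enc, w_dec = train_autoencoder(PATTERNS, n_hidden=2)
print("reconstruction MSE:", mse(PATTERNS, w_enc, w_dec))
```

Only the two values returned by `encode` need to be stored or transmitted for each four-component pattern; `decode` plays the role of the output layer at the receiving end.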

Restoration of an image is done after transmitting the activation values of the neurons in the hidden layer. As a small, simplified example, consider a network with 256 inputs, 16 hidden nodes, and 256 outputs. The compression here is 0.5 bit/pixel when one byte is assumed to represent the activation level of a hidden neuron: 16 x 8 = 128 bits are transmitted for 256 pixels. Better quality of the restored images can be achieved with the use of larger networks and, possibly, structured multinetwork systems, where each neural network is used for compression of only a portion of the original image.
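The bit-rate figure can be checked with a short calculation. The sketch below also shows one possible way to store a hidden activation in a single byte; it assumes activations lie in the range [0, 1] (as with sigmoid units), which the text does not state, and the function names are hypothetical.

```python
def bits_per_pixel(n_pixels, n_hidden, bits_per_activation=8):
    """Bits transmitted per input pixel when only hidden activations are sent."""
    return n_hidden * bits_per_activation / n_pixels

def quantize(activation):
    """Map an activation assumed to lie in [0, 1] to one byte (0..255)."""
    clipped = min(1.0, max(0.0, activation))
    return int(round(clipped * 255))

def dequantize(byte):
    """Recover an approximate activation from its one-byte code."""
    return byte / 255

print(bits_per_pixel(256, 16))  # 0.5
```

Quantizing to one byte introduces an error of at most about 1/510 per activation, which is one more source of loss in the restored image besides the bottleneck itself.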

Another well-explored problem in image processing is feature extraction. Features such as contours, lines, curves, corners, junctions, roofs, ramps, and so on, can be extracted from an original image. For many image-processing systems these features are enough to classify the image or to apply other processing methods successfully. Connectionist models can be used for different types of feature extraction: region-based, where areas of an image with homogeneous properties are found in terms of their boundaries; edge-based, where local discontinuities are detected first and then curves are formed; and pixel-based, where pixels are classified based on their gray levels.
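To make two of these categories concrete, the following sketch uses a single threshold neuron with hand-set weights. This is a deliberate simplification: in a connectionist system the weights would be learned, and the helper names, the thresholds 128 and 50, and the toy `IMAGE` are all assumptions made for illustration. The pixel-based function labels each pixel from its gray level alone; the edge-based function covers only the first step of that approach (detecting local discontinuities between horizontal neighbours), not the subsequent forming of curves.

```python
def step_neuron(inputs, weights, bias):
    """Threshold unit: outputs 1 when the weighted sum plus bias is positive."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0

def classify_pixels(image, threshold=128):
    """Pixel-based: label each pixel 1 (bright) or 0 (dark) from its gray level."""
    return [[step_neuron([g], [1.0], -threshold) for g in row] for row in image]

def horizontal_edges(image, min_jump=50):
    """Edge-based, first step: mark a jump in gray level between neighbours."""
    edges = []
    for row in image:
        out = []
        for left, right in zip(row, row[1:]):
            # One unit responds to dark-to-bright jumps, the other to bright-to-dark.
            rising = step_neuron([left, right], [-1.0, 1.0], -min_jump)
            falling = step_neuron([left, right], [1.0, -1.0], -min_jump)
            out.append(1 if rising or falling else 0)
        edges.append(out)
    return edges

# Hypothetical 3 x 4 gray-level image: dark on the left, bright on the right.
IMAGE = [
    [10, 12, 200, 205],
    [11, 13, 198, 210],
    [9, 14, 202, 207],
]

print(classify_pixels(IMAGE))   # [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 1, 1]]
print(horizontal_edges(IMAGE))  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The column of ones in the second result marks the vertical boundary between the dark and bright regions, which is the kind of local discontinuity from which an edge-based method would go on to form curves.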