Limitations To Using The Hopfield Network

1. Memory capacity. The number m of training patterns should be no more than the number of neurons n, and in practice considerably fewer (according to Hopfield, it should be less than 0.15n; according to some newer results, m ≤ 0.5n/log n). This means that the memorizing capacity of a Hopfield network is severely limited. Catastrophic "forgetting" may occur if we try to memorize more patterns than the network is supposed to handle.
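To make the two capacity figures concrete, the short sketch below evaluates both for a given number of neurons. The function name `capacity_estimates` is hypothetical, and the logarithm is assumed to be the natural logarithm, since the text does not state the base.

```python
import math


def capacity_estimates(n):
    """Return the two pattern-count estimates quoted above for n neurons."""
    hopfield_bound = 0.15 * n
    # Assumption: "log" in the text is read as the natural logarithm.
    log_bound = 0.5 * n / math.log(n)
    return hopfield_bound, log_bound


for n in (100, 1000):
    h, g = capacity_estimates(n)
    print(f"n = {n}: 0.15n = {h:.1f}, 0.5n/ln(n) = {g:.1f}")
```

Under this reading of the logarithm, the second estimate is the stricter one for both values of n shown.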

2. Discrepancy limitation. A new pattern can be recognized as one of the training patterns only if it differs from that training pattern by no more than about 25%.

3. Orthogonality between patterns. The more orthogonal (dissimilar) the training patterns, the better the recognition.
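Both the discrepancy between two patterns and their degree of orthogonality can be measured directly. The sketch below assumes bipolar (+1/-1) patterns, which the text does not specify; the helper names `hamming_fraction` and `overlap` are illustrative only.

```python
def hamming_fraction(p, q):
    """Fraction of positions in which two equal-length patterns differ."""
    return sum(a != b for a, b in zip(p, q)) / len(p)


def overlap(p, q):
    """Normalized dot product of two bipolar patterns; 0 means orthogonal."""
    return sum(a * b for a, b in zip(p, q)) / len(p)


stored = [1, 1, -1, -1, 1, 1, -1, -1]
other = [1, -1, 1, -1, 1, -1, 1, -1]
noisy = [1, 1, -1, -1, 1, 1, -1, 1]  # "stored" with its last element flipped

print("discrepancy stored/noisy:", hamming_fraction(stored, noisy))
print("overlap stored/other:", overlap(stored, other))
```

For bipolar patterns the two measures are related by overlap = 1 - 2 × (Hamming fraction), so a 25% discrepancy corresponds to an overlap of 0.5 with the stored pattern.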

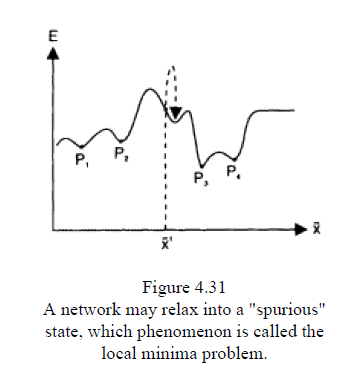

4. Spurious states of attraction. Sometimes the network learns some patterns (creates basins of attraction), called spurious states, that are not present in the set of training patterns. An example of such a state is shown in figure 4.31. In order to overcome this limitation, Hopfield et al. (1983) introduced a "little bit of unlearning" for every learning pattern.

Spurious states may be useful if the Hopfield network is used for partial matching and approximate reasoning (see NPS chapter 6). The input values are between 0 and 1 and represent the certainty of the facts or fuzzy membership degrees.
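The unlearning idea can be sketched as follows. This is not the formula from the text, which is not reproduced here; it assumes the commonly described form of the procedure, in which the network is relaxed from a random state to a final state s and the weights are then reduced by a small multiple of s_i s_j. The Hebbian storage rule, the bipolar coding, the step size `epsilon`, and all function names are assumptions made for illustration.

```python
import random


def hebbian_weights(patterns):
    """Outer-product (Hebbian) weights with a zero diagonal (assumed rule)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def relax(w, state, rng, max_sweeps=100):
    """Asynchronous updates in random order until no neuron changes."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in rng.sample(range(n), n):
            field = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


def unlearn(w, rng, epsilon=0.01, trials=50):
    """Weaken whatever attractor a random start falls into (assumed form)."""
    n = len(w)
    for _ in range(trials):
        start = [rng.choice((-1, 1)) for _ in range(n)]
        s = relax(w, start, rng)
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] -= epsilon * s[i] * s[j]
    return w


rng = random.Random(0)
patterns = [[rng.choice((-1, 1)) for _ in range(16)] for _ in range(3)]
weights = unlearn(hebbian_weights(patterns), rng)
```

Because each correction subtracts the same amount from w[i][j] and w[j][i], the weight matrix stays symmetric after unlearning.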

5. Weight symmetry. The weight matrix has to be symmetrical in order for the network to reach an equilibrium. The symmetrical synaptic weights are not at all biologically plausible, but they are a useful limitation here.

6. Local minima problem. A major disadvantage of the Hopfield network is that it can rest in a local minimum state instead of a global minimum energy state, thus associating a new input pattern with a spurious state.

Hopfield networks are useful for many applications, including pattern recognition, finite state automaton realization, and implementation of AI-reasoning machines.
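The local minima problem in item 6 can be illustrated by tracking the network's energy during recall. The sketch below is a minimal illustration under stated assumptions: bipolar (+1/-1) states, Hebbian outer-product weights with zero diagonal, zero thresholds, and the energy E = -1/2 Σ w_ij s_i s_j. The function names are hypothetical. With symmetric weights, each asynchronous update leaves the energy unchanged or lowers it, so the network settles in whichever minimum is reached first, which need not be a stored pattern.

```python
import random


def hebbian_weights(patterns):
    """Outer-product (Hebbian) weights with a zero diagonal (assumed rule)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def energy(w, s):
    """Energy of state s, assuming zero thresholds."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(w, state, rng, max_sweeps=100):
    """Asynchronous recall; returns the final state and the energy after each flip."""
    s = list(state)
    n = len(s)
    trace = [energy(w, s)]
    for _ in range(max_sweeps):
        changed = False
        for i in rng.sample(range(n), n):
            field = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
                trace.append(energy(w, s))
        if not changed:
            break
    return s, trace


rng = random.Random(0)
n = 16
patterns = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(3)]
w = hebbian_weights(patterns)
probe = [rng.choice((-1, 1)) for _ in range(n)]
final, trace = recall(w, probe, rng)

# A final state that is neither a stored pattern nor its inverse is spurious.
is_stored = any(final == p or final == [-x for x in p] for p in patterns)
print("energy trace:", [round(e, 3) for e in trace])
print("ended in a stored pattern or its inverse:", is_stored)
```

Running the recall from several different random probes shows how often the network ends in a state outside the training set.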