Introduction To Learning

Machine learning is the study of how to build computer systems that adapt and improve with experience. It is a subfield of artificial intelligence and intersects with cognitive science, information theory, and probability theory, among others.

Classical AI deals mainly with deductive reasoning, whereas learning represents inductive reasoning. Deductive reasoning starts from a set of general axioms and arrives at answers to queries about a particular situation; inductive reasoning starts from a set of particular instances and arrives at general axioms.

Classical AI often suffers from the knowledge acquisition problem in real-life applications, where obtaining and updating the knowledge base is costly and error-prone. Machine learning addresses this knowledge acquisition bottleneck by deriving the required knowledge from data by induction.

Machine learning is particularly attractive for many real-life problems for the following reasons:

- Some tasks cannot be defined well except by example

- Working environment of machines may not be known at design time

- Explicit knowledge encoding may be difficult and not available

- Environments change over time

- Biological systems learn

Learning is now widely used in a number of application areas, including:

- Data mining and knowledge discovery

- Speech/image/video (pattern) recognition

- Adaptive control

- Autonomous vehicles/robots

- Decision support systems

- Bioinformatics

- The World Wide Web (WWW)

Formally, a computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.

Thus a learning system is characterized by:

- task T

- experience E, and

- performance measure P
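The definition can be made concrete with a small sketch. The toy problem below is an assumption made only for illustration and does not come from the text: the task T is to decide whether an integer reading between 0 and 99 is at or above a hidden threshold, the experience E is a list of labelled readings, and the performance measure P is the fraction of readings classified correctly. All names (`HIDDEN_THRESHOLD`, `learn_threshold`, `performance`) are hypothetical.

```python
# Hypothetical toy problem; none of these names come from the text.
HIDDEN_THRESHOLD = 70  # the rule the learner has to discover


def true_label(x):
    """Ground truth for task T: is the reading at or above the threshold?"""
    return x >= HIDDEN_THRESHOLD


def learn_threshold(experience):
    """Estimate the threshold from labelled examples (x, label).

    The estimate is the midpoint between the largest reading seen with a
    False label and the smallest reading seen with a True label.
    """
    lo = max((x for x, label in experience if not label), default=0)
    hi = min((x for x, label in experience if label), default=100)
    return (lo + hi) // 2


def performance(threshold, test_inputs):
    """Performance measure P: fraction of test readings classified correctly."""
    correct = sum((x >= threshold) == true_label(x) for x in test_inputs)
    return correct / len(test_inputs)


# Experience E: a fixed stream of labelled readings.
stream = [(x, true_label(x)) for x in (10, 90, 40, 80, 60, 75, 68, 72)]
test_inputs = list(range(100))

scores = []
for n in (0, 2, 4, 6, 8):
    threshold = learn_threshold(stream[:n])
    scores.append(performance(threshold, test_inputs))
    print(f"E = {n} examples -> P = {scores[-1]:.2f}")
```

In this particular run P never decreases as more of the stream is used, which is what the definition asks for. That behaviour depends on the chosen stream; the midpoint rule does not guarantee improvement after every single example.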

Examples:

Learning to play chess

T: Play chess

P: Percentage of games won in a world tournament

E: Opportunity to play against self or other players

Learning to drive a van

T: Drive on a public highway using vision sensors

P: Average distance traveled before an error (as judged by a human observer)

E: Sequence of images and steering actions recorded during human driving.

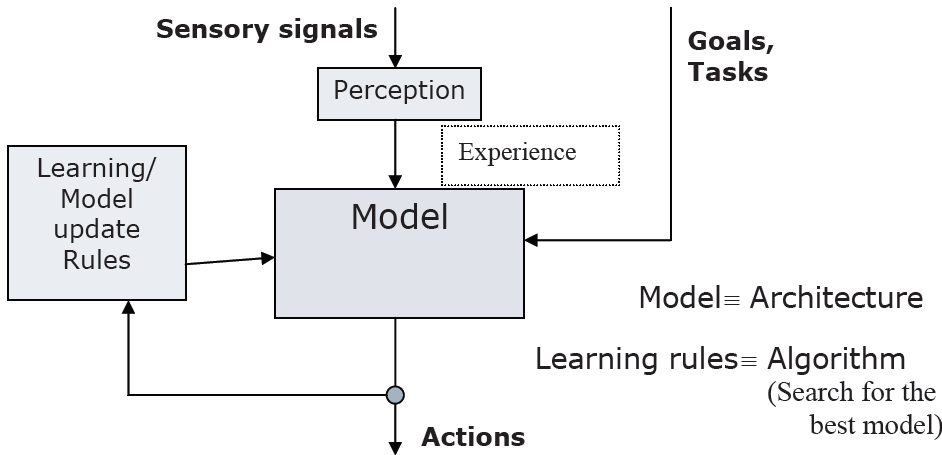

The block diagram of a generic learning system which can realize the above definition is shown below:

As the diagram shows, the system consists of the following components:

- Goal: Defined with respect to the task to be performed by the system

- Model: A mathematical function that maps perceptions to actions

- Learning rules: Rules that update the model parameters with new experience so that the performance measure with respect to the goal is optimized

- Experience: A set of perceptions (and possibly the corresponding actions)
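The four components can be illustrated with a minimal sketch. Everything specific in it is an assumption chosen for brevity and is not prescribed by the text: the model is a one-parameter linear function, the goal is a low mean squared error, the learning rule is a simple gradient-style (delta rule) update, and the experience is three hand-made perception/action pairs. The names `model`, `goal_error`, and `learning_rule` are hypothetical.

```python
# Experience: (perception, action) pairs, here consistent with action = 2 * perception.
experience = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]


def model(w, perception):
    """Model: maps a perception to an action using a single parameter w."""
    return w * perception


def goal_error(w, data):
    """Goal: mean squared error between predicted and recorded actions."""
    return sum((model(w, p) - a) ** 2 for p, a in data) / len(data)


def learning_rule(w, perception, action, rate=0.05):
    """Learning rule: one delta-rule step for a single example.

    Moves w against the gradient of the squared error for this example
    (the constant factor is folded into the rate).
    """
    error = model(w, perception) - action
    return w - rate * error * perception


w = 0.0  # initial model parameter
initial_error = goal_error(w, experience)

for epoch in range(50):
    for perception, action in experience:
        w = learning_rule(w, perception, action)

final_error = goal_error(w, experience)
print(f"learned w = {w:.4f}")
print(f"error before = {initial_error:.4f}, after = {final_error:.6f}")
```

With this data and rate, each update shrinks the distance between `w` and 2, so the parameter settles close to 2 and the error falls accordingly. A different model class or learning rule would fit the same block diagram equally well.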